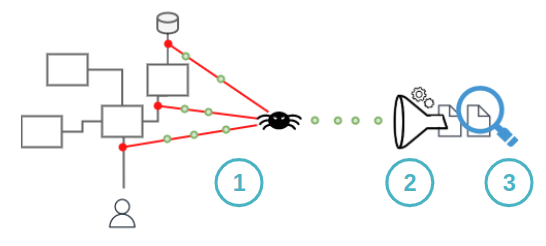

How does it do it?

1. Capture network packets

Spider Whisperers agents are deployed on the servers or services to capture network traffic.

They are attached to the nodes' physical or virtual network interface(s).

On Kubernetes, they can even be deployed remotely from the UI, using Spider Controllers agents!

Driven by Spider remote configuration, the agents capture raw network packets between systems.

The network packets, their associated TCP sessions and host names are continuously streamed to the Spider server for parsing.

Another set of agents, the Gociphers, track the OpenSSL secrets used to secure TLS communications and stream them to the Spider server, so that Spider can decipher TLS-encrypted communications.

These agents also track the complete network usage of Kubernetes clusters for mapping and discovery.

2. Rebuild communications

The data streamed by all agents is parsed on the fly to rebuild higher-level communications.
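To make "rebuilding" more concrete, here is a minimal sketch of the general idea: captured TCP segments are put back in sequence order to recover the byte stream, from which a higher-level message (here, an HTTP request line) can be parsed. This is not Spider's actual parser; the `Segment` shape and the `reassemble` and `parse_request_line` names are assumptions made for illustration, and sequence-number wraparound is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """One captured TCP segment (hypothetical, simplified shape)."""
    seq: int        # sequence number of the first payload byte
    payload: bytes


def reassemble(segments, initial_seq):
    """Rebuild the contiguous byte stream that starts at initial_seq.

    Segments may arrive out of order or be retransmitted.
    Reassembly stops at the first gap (a packet that was not captured).
    """
    stream = bytearray()
    next_seq = initial_seq
    for seg in sorted(segments, key=lambda s: s.seq):
        end = seg.seq + len(seg.payload)
        if end <= next_seq:
            continue  # nothing new: pure retransmission
        if seg.seq > next_seq:
            break     # gap in the capture: stop here
        # Skip any bytes already seen, keep only the new tail.
        stream += seg.payload[next_seq - seg.seq:]
        next_seq = end
    return bytes(stream)


def parse_request_line(stream):
    """Parse the first line of an HTTP/1.x request from the byte stream."""
    line, _, _ = stream.partition(b"\r\n")
    method, target, version = line.decode("ascii").split(" ", 2)
    return {"method": method, "target": target, "version": version}
```

For example, feeding `reassemble` the segments of a request in arbitrary order, with one duplicate, and passing the result to `parse_request_line` yields the method, target and protocol version of the original request.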

Spider applies filters, templates and tags to enrich communications and remove sensitive information.
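As an illustration of this kind of enrichment, the sketch below masks sensitive header values and adds tags based on the request path. It is only an example under stated assumptions: the dictionary shape of a communication, the `SENSITIVE_HEADERS` list, the `TAG_RULES` table and the `enrich` function are hypothetical and do not describe Spider's configuration format.

```python
import re

# Assumed list of headers whose values should never reach the search engine.
SENSITIVE_HEADERS = {"authorization", "cookie", "set-cookie"}

# Hypothetical tagging rules: (tag, pattern applied to the request path).
TAG_RULES = [
    ("orders-api", re.compile(r"^/orders(/|$)")),
    ("health-check", re.compile(r"^/healthz?$")),
]


def enrich(communication):
    """Return a copy of the communication with secrets masked and tags added."""
    headers = {
        name: "***" if name.lower() in SENSITIVE_HEADERS else value
        for name, value in communication["headers"].items()
    }
    tags = [tag for tag, pattern in TAG_RULES
            if pattern.search(communication["path"])]
    return {**communication, "headers": headers, "tags": tags}
```

The input is left unmodified, so the same rebuilt communication could be passed through several independent enrichment steps.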

The data is then streamed to the search engine to be available for the UI.

3. Offer search and visual analysis tools

The UI makes all communications available to users, with dynamic filtering and high-level visual representations.

All along

During the whole process, agent availability and parsing quality are tracked continuously, both for complete observability and to report on the completeness and quality of the parsing.
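One simple way to picture such quality tracking is as a pair of ratios computed from counters. The sketch below is purely illustrative: the `ParsingStats` fields and the `quality_report` function are assumptions, not metrics documented by Spider.

```python
from dataclasses import dataclass


@dataclass
class ParsingStats:
    """Hypothetical counters collected over one time window."""
    packets_received: int
    packets_parsed: int
    sessions_total: int
    sessions_with_gaps: int   # sessions where some packets were missing


def quality_report(stats):
    """Summarise completeness as ratios between 0.0 and 1.0."""
    def ratio(part, whole):
        # An empty window counts as fully complete rather than dividing by zero.
        return part / whole if whole else 1.0

    return {
        "packet_parsing_ratio": ratio(stats.packets_parsed,
                                      stats.packets_received),
        "complete_session_ratio": ratio(
            stats.sessions_total - stats.sessions_with_gaps,
            stats.sessions_total),
    }
```

A ratio that drops below 1.0 would indicate that some captured traffic could not be rebuilt into communications during that window.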