Replica reduction after performance optimisations

I had a task that had been hanging around for months:

Reducing the replica and server counts as a consequence of all the performance improvements.

Indeed, the Spider cluster was still running a replica configuration designed back when it was implemented in Node 8, with generators, and used NGINX as its gateway.

At that time, I needed many replicas to work around some NGINX flaws under high load within Docker. Except that now... the cluster is handling between 2x and 3x more load, and constantly!

So I did the work of computing the theoretical replica count for each service, and reducing the replicas until it felt reasonable.
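To give an idea of what such a theoretical count can look like, here is a minimal sketch. It is not the exact computation I used: the function name, the parameters (`peakRequestsPerSec`, `requestsPerSecPerReplica`, `headroom`, `minReplicas`) and the numbers in the example are all hypothetical, chosen only to illustrate the reasoning.

```javascript
// Hypothetical sizing helper: none of these names or numbers come from the Spider cluster.
// Idea: take the peak load of a service, add some safety headroom,
// divide by what a single replica can handle, and round up.
function theoreticalReplicas({
  peakRequestsPerSec,        // assumed: measured peak load of the service
  requestsPerSecPerReplica,  // assumed: measured capacity of one replica
  headroom = 0.3,            // assumed: 30% safety margin
  minReplicas = 2,           // assumed: keep at least 2 replicas for availability
}) {
  const needed = (peakRequestsPerSec * (1 + headroom)) / requestsPerSecPerReplica;
  return Math.max(minReplicas, Math.ceil(needed));
}

// Example with made-up numbers: 400 req/s at peak, 150 req/s per replica.
console.log(theoreticalReplicas({ peakRequestsPerSec: 400, requestsPerSecPerReplica: 150 })); // 4
```

The result is only a starting point: the final numbers still have to be validated by lowering replicas step by step and watching the metrics.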

Overall results

And the result is great:

- From 221 replicas in total across the operational services,

- It got reduced to... 36! :) I removed 185 replicas from the cluster, about 84% of them!

Globally, it is interesting to note that removing replicas:

- Drastically reduced the RAM usage, which was the goal: -14 GB

- Also decreased the total CPU usage.

- I think this is due to less CPU overhead from switching between processes

Thanks to the memory reduction, I moved from 7 M5 AWS instances to 5 C5 instances, which are cheaper and have better CPUs (but only 4 GB of RAM). I may still remove one server, because the average load is 90% CPU and 2 GB of RAM. But I'll wait some time to be sure.

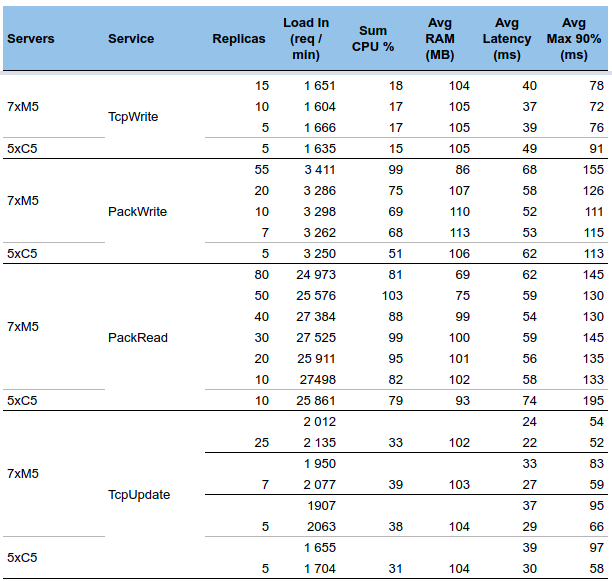

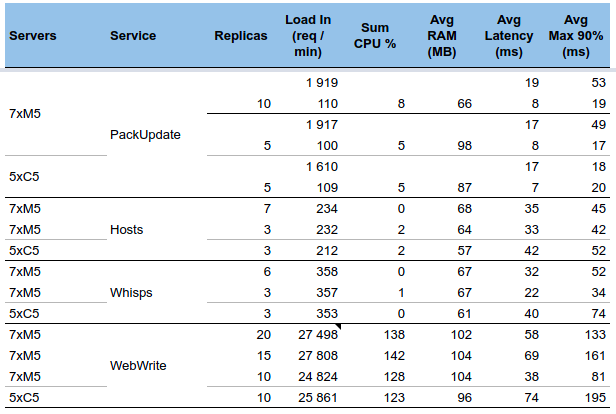

Detailed step by step

The replica reduction was done step by step, and metrics were saved at each step.

- You may notice that reducing replicas made services faster!! This could be linked to having fewer context switches...

- And then, moving from 7 servers to 5 servers increased the latency again. For sure... when a server does almost nothing, it is faster ;-)

- There was also an intermediate step, not in this table, with 7 C5 instances. But the latency barely changed.

Traefik issue

After reducing replicas, I encountered a strange issue:

There were some sporadic 502 errors when one service called another one. But the error was only captured in the caller: the callee never received the request!

And indeed, the issue was in the Traefik gateway. The case is not so frequent, but it is due to the big difference in idle (keep-alive) socket timeout between Traefik and Node. Traefik is at 90s, Node... at 5s. So Traefik could try to reuse a connection that Node had already closed, and answer the caller with a 502. Once Node's timeout was configured to be longer than Traefik's, the 502s disappeared. Reference: https://github.com/containous/traefik/issues/3237
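For illustration, here is a minimal sketch of this kind of setting on a plain Node `http` server. It assumes the 90s Traefik idle timeout mentioned above. The helper name `createGatewayFriendlyServer` and the 5s margin are hypothetical, and the Spider services may set this differently (for instance through their web framework).

```javascript
const http = require('http');

// Assumption: the gateway keeps idle connections for 90 s, as stated above.
const GATEWAY_IDLE_TIMEOUT_MS = 90000;

// Hypothetical helper: builds an HTTP server whose idle keep-alive sockets
// outlive the gateway's, so the gateway never reuses a socket Node already closed.
function createGatewayFriendlyServer(handler) {
  const server = http.createServer(handler);
  // Node's default keepAliveTimeout is 5 s; raise it above the gateway's timeout.
  server.keepAliveTimeout = GATEWAY_IDLE_TIMEOUT_MS + 5000;
  // Often recommended alongside: keep headersTimeout above keepAliveTimeout,
  // since some Node versions reset connections otherwise.
  server.headersTimeout = server.keepAliveTimeout + 1000;
  return server;
}
```

A service would then call `createGatewayFriendlyServer(handler).listen(port)` as usual. The important part is only the ordering: the backend must close idle connections later than the gateway does.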