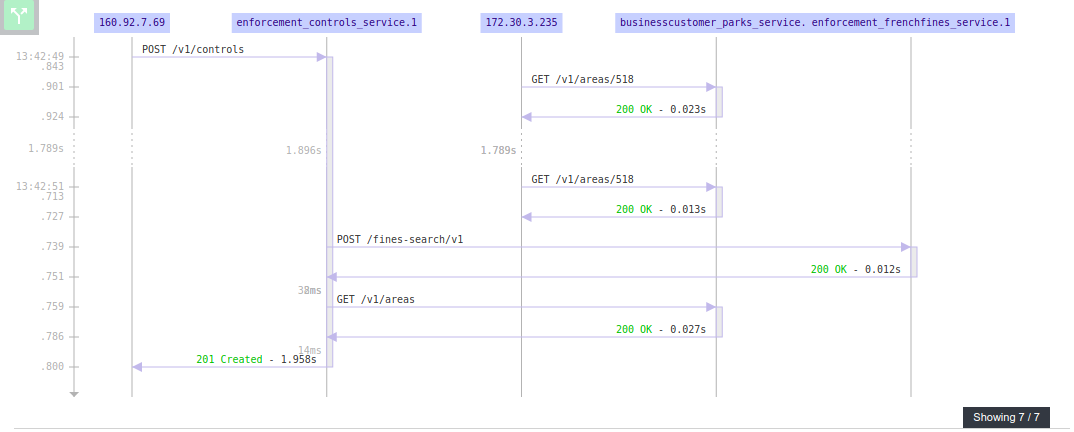

Merging replicas on map

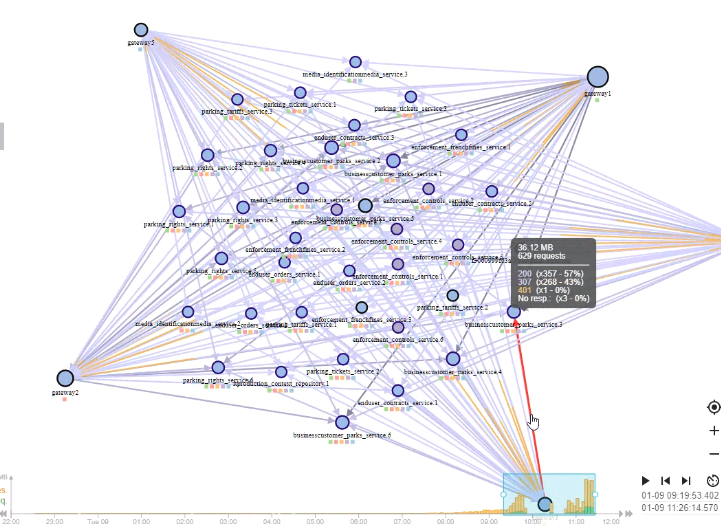

When I saw the production map, I got scared. And Allan's feedback pointed the same way: it is unusable as is. Too many nodes!

So I reacted quickly, and here comes a new feature: merging replicas. It is now possible to merge the replicas of the same application on the map.

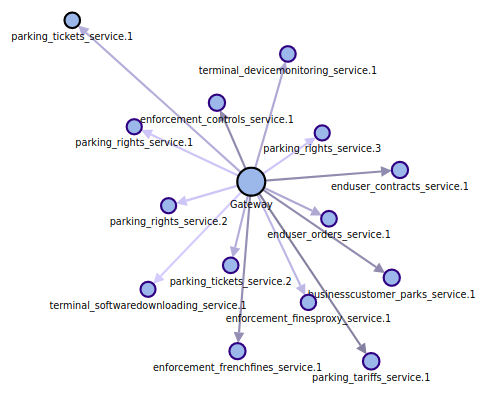

Before (note the multiple instances of tickets and rights):

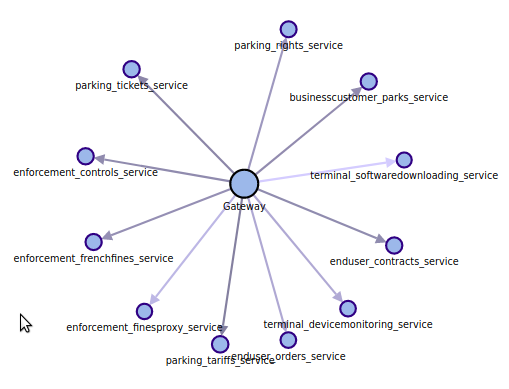

After:

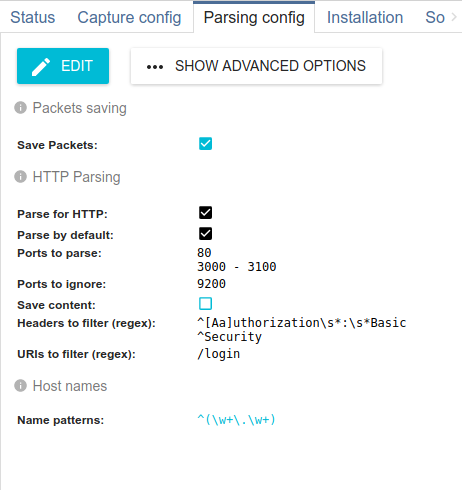

- This is based on finding nodes with the same 'functional name'.

- This functional name is extracted from the node FQDN or the node short name with a regular expression:

  - Soon to be customizable

  - For now: all characters up to the first '.'
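As a rough illustration of the current rule (all characters up to the first '.'), here is a minimal sketch. The pattern, the `functional_name` helper, and the host names are made up for the example and are not taken from the actual code.

```python
import re

# Assumed pattern: capture everything before the first '.'.
# The real expression used by the map may differ.
FUNCTIONAL_NAME_RE = re.compile(r"^([^.]+)")


def functional_name(node_name: str) -> str:
    """Return the functional name of a node FQDN or short name."""
    match = FUNCTIONAL_NAME_RE.match(node_name)
    # Fall back to the full name when nothing matches
    # (empty name, or a name starting with '.').
    return match.group(1) if match else node_name


# Hypothetical node names, for illustration only.
print(functional_name("tickets.node1.example.com"))
print(functional_name("tickets.node2.example.com"))
print(functional_name("rights"))
```

With this rule, both hypothetical `tickets` hosts map to the same functional name, and a short name without any '.' is kept as is.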

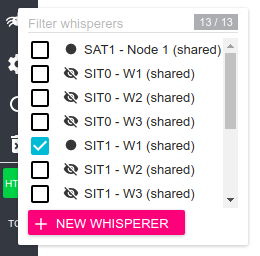

- When the option is active, all nodes with the same functional name are merged into a single node, but the features stay the same:

  - You can filter on the functional name (all merged nodes)

  - You can filter all communications to this functional name

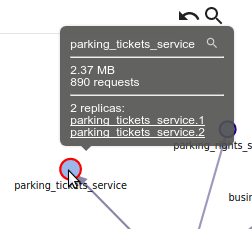

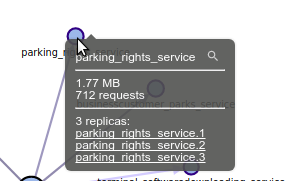

- And what's new:

  - You can see how many replicas you have

  - You can open the replicas one by one in the host view
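To make the merge itself concrete, here is a small sketch of how nodes and communications could be grouped by functional name while keeping the replica list behind each merged node. The data shapes (a list of node names, a list of `(source, destination)` pairs) and the `merge_replicas` helper are assumptions for the example, not the real map model.

```python
from collections import defaultdict


def functional_name(node_name: str) -> str:
    # Assumed rule: all characters up to the first '.'.
    return node_name.split(".", 1)[0]


def merge_replicas(nodes, edges):
    """Group nodes by functional name and rewrite edges accordingly.

    Returns (groups, merged_edges):
    - groups maps each functional name to the list of its replicas
    - merged_edges is the set of edges between functional names
    """
    groups = defaultdict(list)
    for node in nodes:
        groups[functional_name(node)].append(node)

    # Edges between replicas collapse into one edge between merged nodes.
    # Two replicas of the same application talking to each other
    # would show up here as a self-loop.
    merged_edges = {
        (functional_name(src), functional_name(dst)) for src, dst in edges
    }
    return dict(groups), merged_edges


# Hypothetical data, for illustration only.
nodes = ["tickets.a.example.com", "tickets.b.example.com", "rights.a.example.com"]
edges = [
    ("tickets.a.example.com", "rights.a.example.com"),
    ("tickets.b.example.com", "rights.a.example.com"),
]
groups, merged_edges = merge_replicas(nodes, edges)
for name, replicas in groups.items():
    print(name, len(replicas), replicas)
```

The replica count is just the length of each group, and the kept list of original names is what would let a merged node open its replicas one by one.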

Allan, Aless, please send me a screenshot of this feature active in production! :)