Reworked Template feature

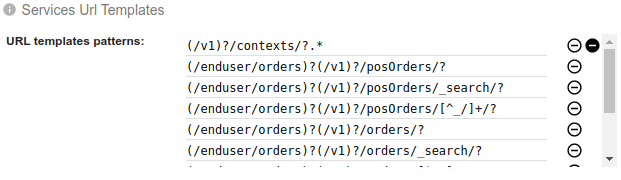

Previous state of URL templates

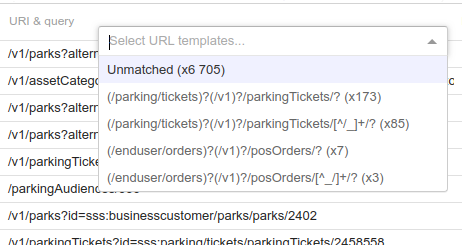

For many months, it has been possible to define URL templates in user settings to match URLs when filtering and computing stats:

Config

Grid filtering

Grid filtering

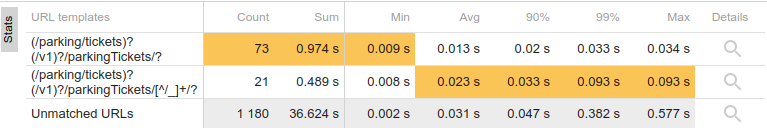

Stats

It worked, but:

- It was painful to configure: one regular expression per URL

- It was not very explicit: the regular expression itself was used as the label

- It was not efficient: the regular expressions were used as filters in Elasticsearch queries, which made Elasticsearch run ALL the regular expressions on all URIs every time the filter was selected... :(

- Templates were associated with a user, not with a Whisperer or a team

I then did a major rework of the feature to make it more efficient, more user friendly and... to allow more features to be built on top of it :)

The new Request Templates!

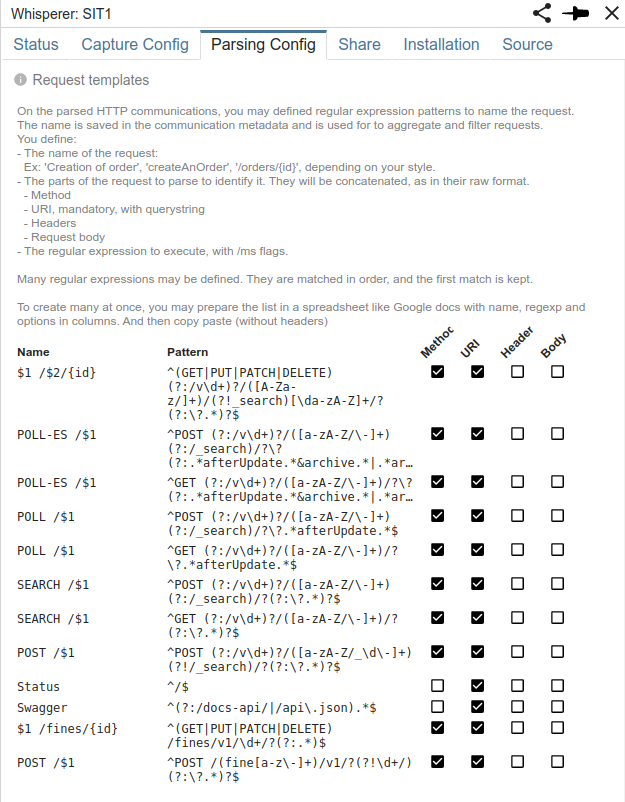

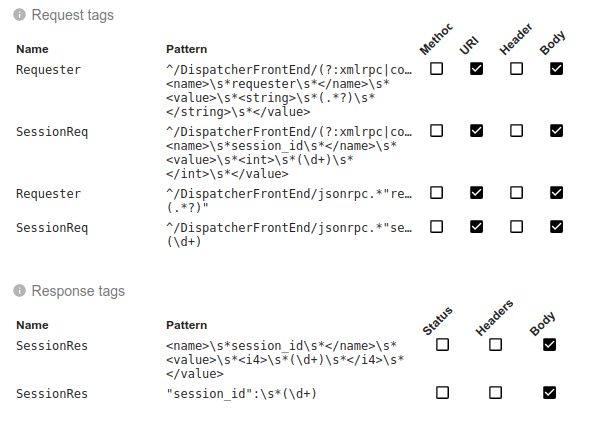

First, you may notice from the name that these are no longer URL templates but Request templates. Why? Because not only the URL can be analysed, but also the HTTP method, the headers and the body.

For each template, you define:

- A name

- The fields to parse

- A regular expression

Spider then associates each HTTP communication with the first matching template during parsing.
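A minimal sketch of this first-match rule, assuming templates are evaluated in their configured order and that the request has been reduced to a single string such as "GET /path". The RequestTemplate class, the helper name and the sample URLs are hypothetical illustrations, not Spider's code.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RequestTemplate:
    """Hypothetical in-memory form of one configured template."""
    name: str     # label that ends up on the communication
    pattern: str  # regular expression tested against the request


def first_matching_template(templates: List[RequestTemplate], request: str) -> Optional[str]:
    """Return the name of the first template whose regex matches, else None."""
    for template in templates:  # configuration order matters
        if re.search(template.pattern, request):
            return template.name
    return None  # unmatched communications stay untemplated


templates = [
    RequestTemplate("Get user", r"^GET /api/users/\d+$"),
    RequestTemplate("Any user call", r"/api/users"),
]

print(first_matching_template(templates, "GET /api/users/42"))  # Get user
print(first_matching_template(templates, "POST /api/users"))    # Any user call
print(first_matching_template(templates, "GET /health"))        # None
```

Because evaluation stops at the first hit, specific patterns have to come before generic ones: swapping the two templates above would label every user call as "Any user call".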

Pros: much faster, flexible, easy to use and extensible. Cons: templating happens only once, at parsing time, so communications that are already parsed and templated cannot be changed. Testing patterns is also complicated, for now.
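Until testing is easier in Spider itself, patterns can be dry-run offline before they are saved. A minimal sketch, assuming you have sample requests written as "METHOD URL" strings; the function and the sample data are hypothetical. It reports which template each sample would get under first-match semantics, which samples match nothing, and which templates are never hit (often a sign that an earlier, broader pattern shadows them).

```python
import re
from typing import Dict, List, Optional, Tuple


def check_templates(templates: List[Tuple[str, str]], samples: List[str]) -> Dict[str, object]:
    """Dry-run (name, regex) templates against sample requests, first match wins."""
    compiled = [(name, re.compile(pattern)) for name, pattern in templates]
    assigned: Dict[str, Optional[str]] = {}
    hit = set()
    for sample in samples:
        assigned[sample] = None
        for name, regex in compiled:
            if regex.search(sample):
                assigned[sample] = name
                hit.add(name)
                break  # same first-match rule as during parsing
    return {
        "assigned": assigned,
        "unmatched": [s for s, name in assigned.items() if name is None],
        "never_hit": [name for name, _ in compiled if name not in hit],
    }


report = check_templates(
    [("Users", r"/users"), ("User by id", r"/users/\d+"), ("Orders", r"/orders")],
    ["GET /users", "GET /users/7", "GET /status"],
)
print(report["unmatched"])  # ['GET /status']
print(report["never_hit"])  # ['User by id', 'Orders']
```

Here "User by id" is never hit because the broader "Users" pattern is listed first and captures "GET /users/7".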

Look.

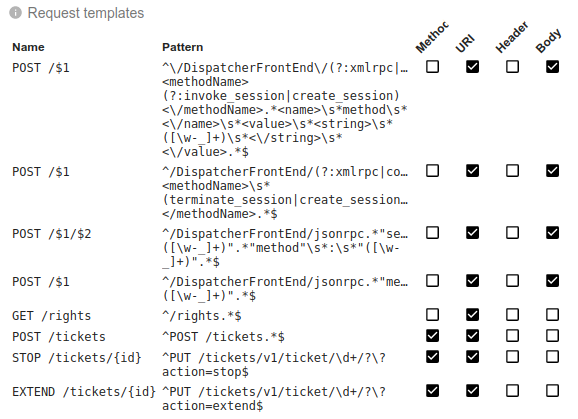

Configuration

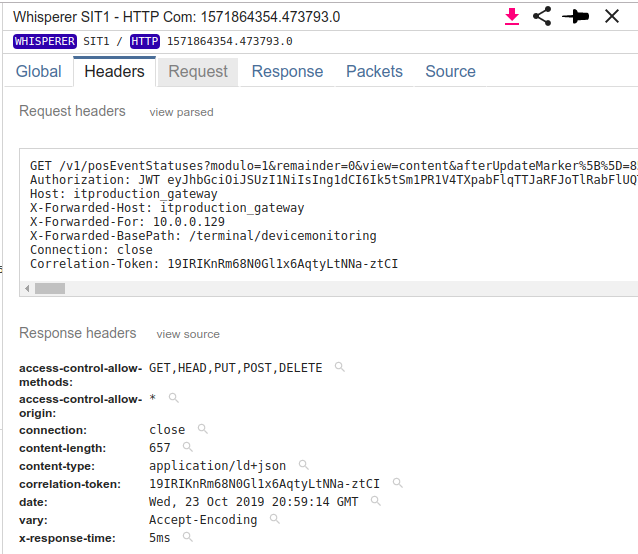

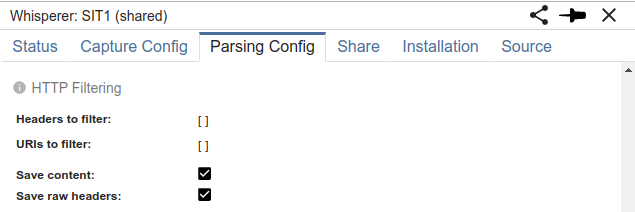

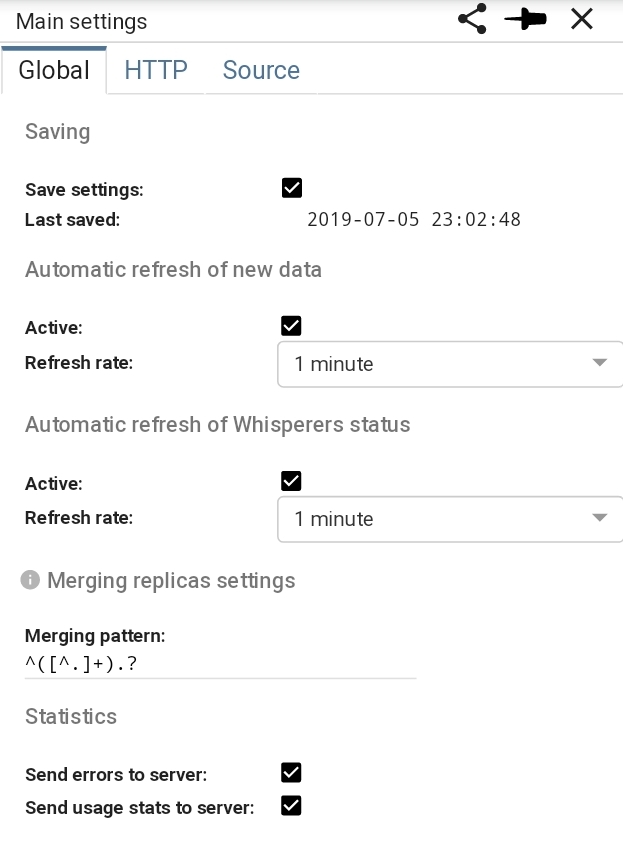

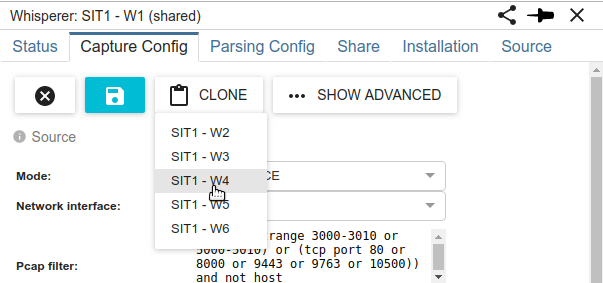

Request templates are part of the Whisperer parsing configuration.

- The regular expression is matched against a combination of the selected request parts

- The name of the first matching template is saved in the req.template field of the HTTP communication

- The name can be plain text or include values extracted from the match

- The regular expression is applied as a substitution pattern to the name, so captured values can be injected into it
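The points above can be sketched as follows, assuming the selected parts are joined with a single space in the order method, URL, headers, body, and that the name uses Python's `\1` capture-group syntax. The exact separator and placeholder syntax Spider uses are not described here and may differ (for instance `$1`); all names and the sample URL are hypothetical.

```python
import re
from typing import Dict, Iterable, Optional

# Hypothetical order in which selected request parts are concatenated.
PART_ORDER = ("method", "url", "headers", "body")


def build_input(request: Dict[str, str], fields: Iterable[str]) -> str:
    """Join the selected request parts into the single string the regex sees."""
    selected = set(fields)
    return " ".join(request[part] for part in PART_ORDER if part in selected)


def template_name(pattern: str, name: str, request: Dict[str, str], fields: Iterable[str]) -> Optional[str]:
    """Return the name with capture groups substituted in, or None if no match."""
    match = re.search(pattern, build_input(request, fields))
    if match is None:
        return None
    return match.expand(name)  # "\1", "\g<2>" or "\g<name>" are replaced from the match


request = {
    "method": "GET",
    "url": "/api/v2/zones/123/tariffs",
    "headers": "accept: application/json",
    "body": "",
}

print(template_name(r"^(GET|POST) /api/v\d+/(\w+)/\d+/(\w+)", r"\1 \2/{id}/\3", request, ["method", "url"]))
# GET zones/{id}/tariffs
```

A plain-text name without group references is returned unchanged, which covers the "plain text" case.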

The regular expressions above are enough to match all the endpoints exposed by Flowbird Streetsmart (or so I believe).

Example:

For Streetsmart, the configuration is maintained in this Google Sheet, and I just copy-paste it into the Spider form. Gorgeous :)

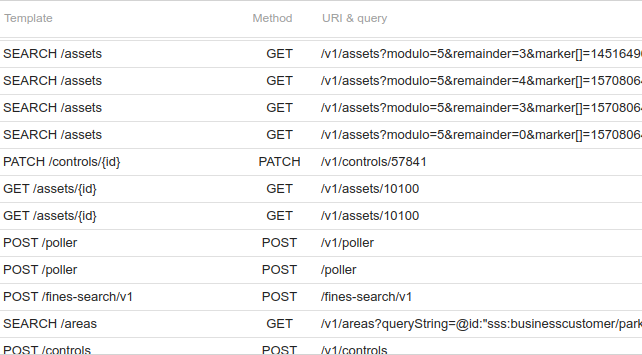

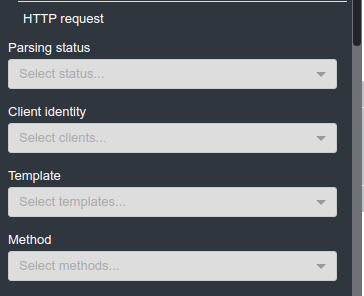

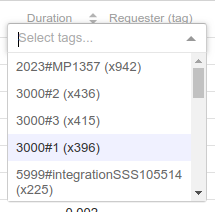

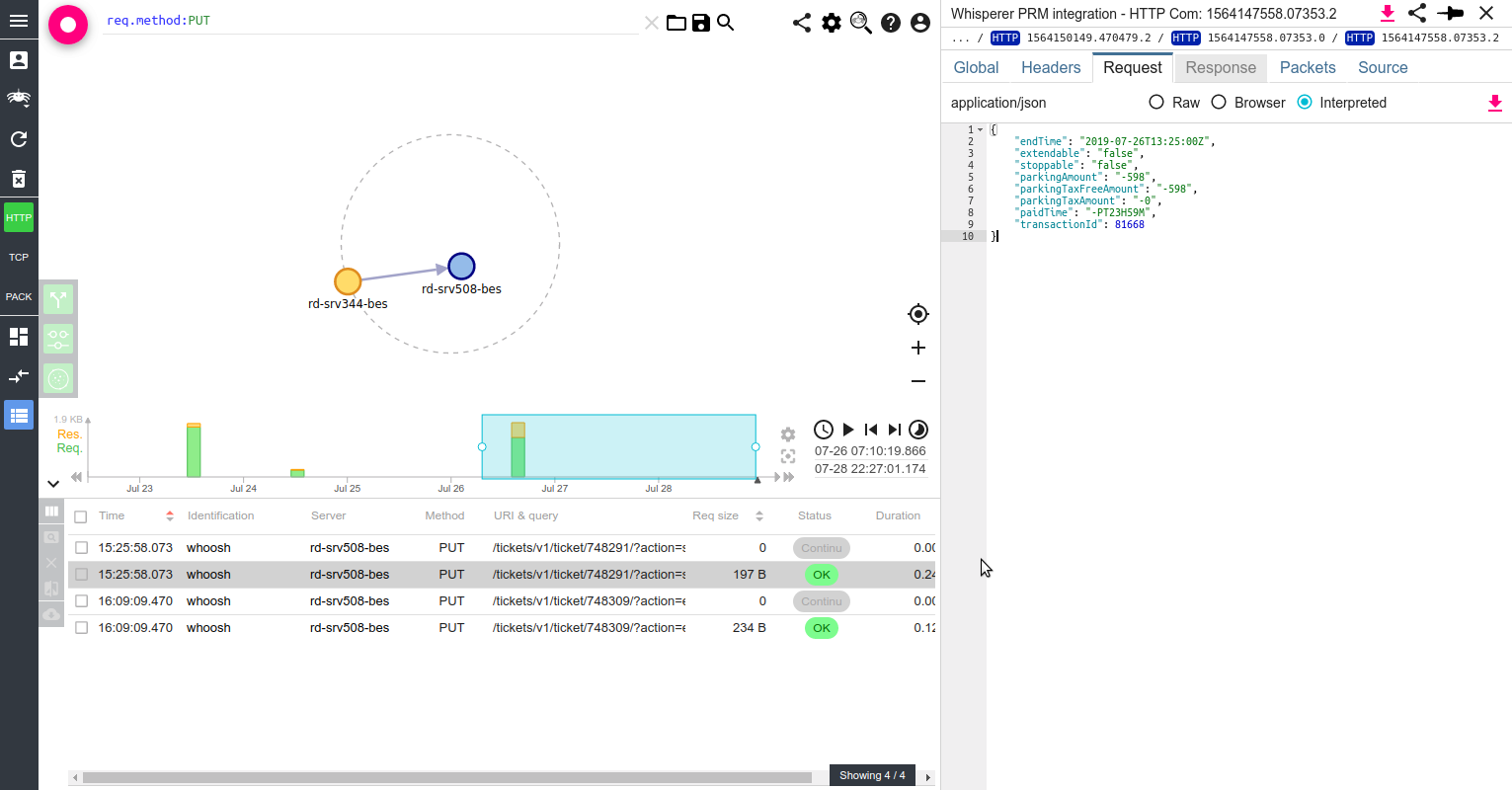

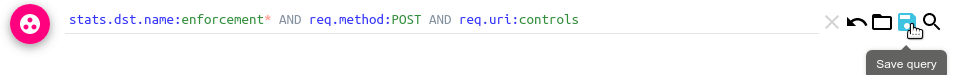

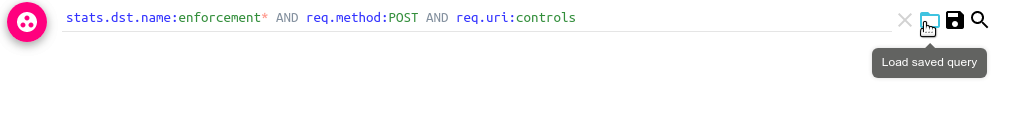
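Copying a block of cells from a spreadsheet usually puts tab-separated text on the clipboard. As a sketch, assuming a hypothetical sheet layout of one template per row with name, fields (comma-separated) and regular expression columns, the rows can be checked for invalid regular expressions before being pasted into the Spider form. Python's regex dialect may not be identical to the one Spider uses, so this only catches the obvious mistakes.

```python
import re
from typing import List, Tuple


def parse_sheet(tsv: str) -> Tuple[List[dict], List[str]]:
    """Parse pasted rows (name<TAB>fields<TAB>regex); collect errors instead of failing."""
    templates, errors = [], []
    for line_no, line in enumerate(tsv.splitlines(), start=1):
        if not line.strip():
            continue  # skip blank rows
        cells = line.split("\t")
        if len(cells) != 3:
            errors.append(f"row {line_no}: expected 3 columns, got {len(cells)}")
            continue
        name, fields, pattern = (cell.strip() for cell in cells)
        try:
            re.compile(pattern)  # reject patterns that do not even compile
        except re.error as exc:
            errors.append(f"row {line_no}: invalid regex {pattern!r}: {exc}")
            continue
        templates.append({
            "name": name,
            "fields": [f.strip() for f in fields.split(",") if f.strip()],
            "pattern": pattern,
        })
    return templates, errors


pasted = "Get user\tmethod,url\t^GET /users/\\d+$\nBroken\turl\t/users/(\\d+\n"
templates, errors = parse_sheet(pasted)
print([t["name"] for t in templates])  # ['Get user']
print(len(errors))                      # 1
```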

Data filtering

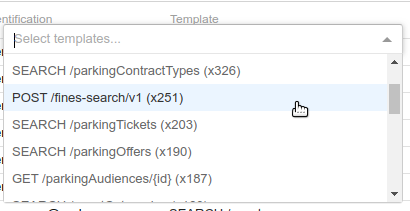

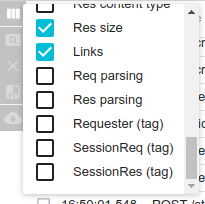

The templates can be filtered in the grid:

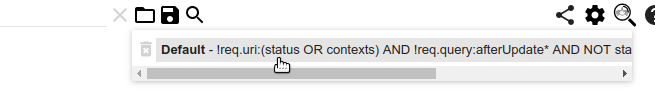

Or in the menu:

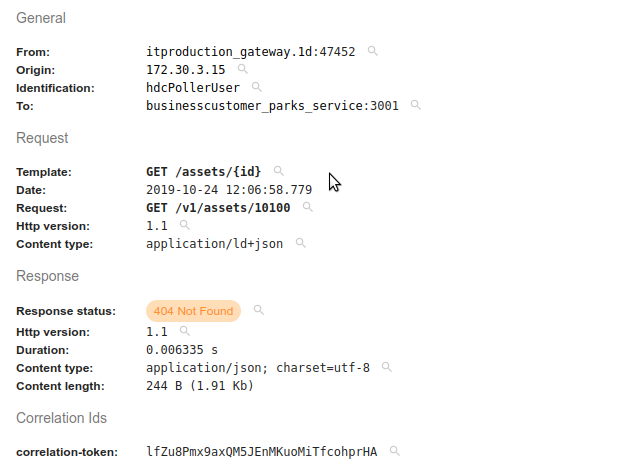

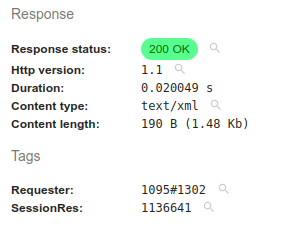
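This is also where the efficiency gain over the old URL templates comes from. As an illustration only (the exact queries Spider sends are not shown here, req.template is assumed to be indexed as a keyword field, and req.uri is a hypothetical field name), compare the Elasticsearch query DSL bodies for the two approaches, written as Python dictionaries:

```python
import json
from typing import List


def old_style_filter(url_regexes: List[str]) -> dict:
    """Previous approach: one regexp clause per URL template, evaluated on every filter."""
    return {
        "query": {
            "bool": {
                # "req.uri" is a hypothetical field name for the request URI.
                "should": [{"regexp": {"req.uri": regex}} for regex in url_regexes],
                "minimum_should_match": 1,
            }
        }
    }


def template_filter(template_names: List[str]) -> dict:
    """New approach: exact match on the req.template value computed at parsing time."""
    return {"query": {"bool": {"filter": [{"terms": {"req.template": template_names}}]}}}


print(json.dumps(template_filter(["GET zones/{id}/tariffs"]), indent=2))
```

The terms clause is a plain lookup of precomputed values, whereas each regexp clause has to be evaluated against the indexed URI values every time the filter is applied.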

Detail view

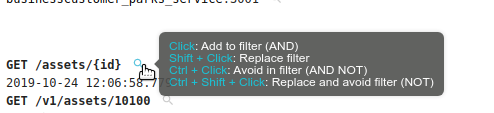

The template associated with the HTTP communication is visible in the detail view, whose fields have been slightly reordered:

As with any other field, you can add the template to the filter or remove it with the small magnifying glass next to it.

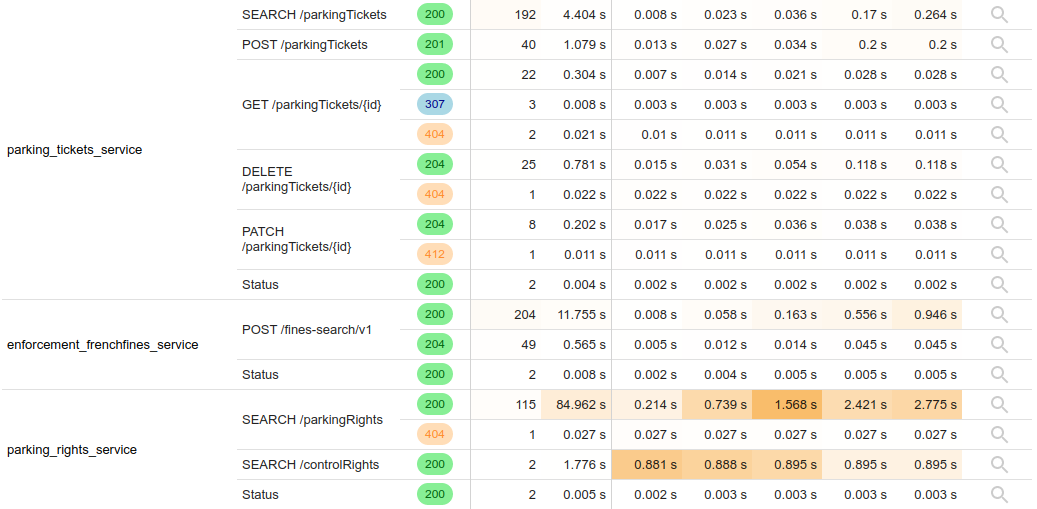

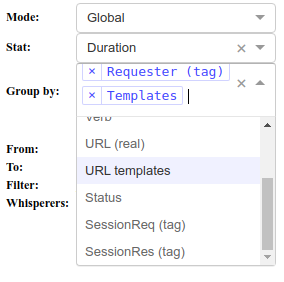

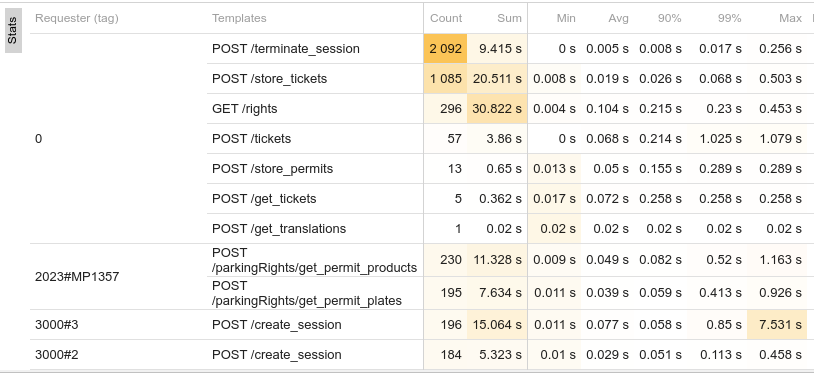

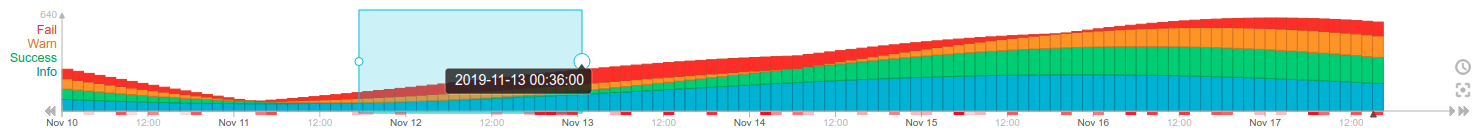

Stats

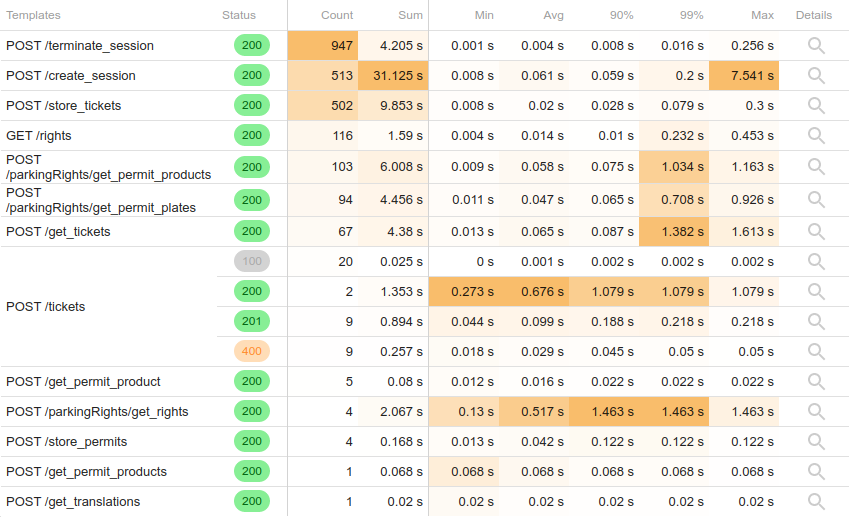

Templates are available as a grouping field in HTTP stats, and they make stats much more readable than in the previous version.
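Grouping by template maps naturally to an Elasticsearch terms aggregation on req.template. A sketch of such a request body, assuming req.template is indexed as a keyword field; the aggregation names and the optional average on a hypothetical duration field are illustrative, not Spider's actual stats query.

```python
from typing import Optional


def stats_by_template(size: int = 20, duration_field: Optional[str] = None) -> dict:
    """Build a search body that counts communications per request template."""
    by_template: dict = {
        "terms": {
            "field": "req.template",
            "size": size,                # number of template buckets to return
            "missing": "(no template)",  # bucket for communications that matched nothing
        }
    }
    if duration_field:
        # Optional average per template, on a hypothetical duration field.
        by_template["aggs"] = {"avg_duration": {"avg": {"field": duration_field}}}
    return {
        "size": 0,  # only the aggregation buckets are needed, not the hits
        "aggs": {"by_template": by_template},
    }


print(stats_by_template(size=10, duration_field="duration"))
```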

What's the use of selecting Headers or Body in the template configuration?

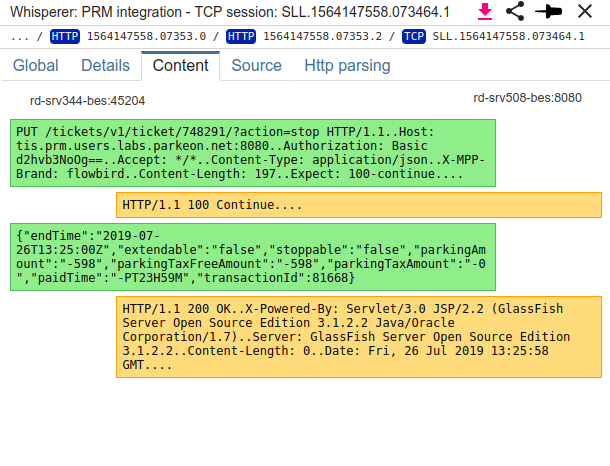

In some cases, such as XML or JSON-RPC APIs, the URI is not enough to 'name' the request. Parsing the headers or the body then becomes useful.
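For instance, JSON-RPC calls typically all go to the same URI, and the procedure being invoked is carried in the "method" member of the JSON body. A sketch, assuming a hypothetical /rpc endpoint and a template that selects the URL and the body (joined with a space), shows how a capture group on the body can produce a meaningful name:

```python
import json
import re
from typing import Optional

# Hypothetical template: URL and body selected, procedure name captured from the body.
PATTERN = r'^/rpc .*?"method"\s*:\s*"([^"]+)"'
NAME = r"RPC \1"


def name_rpc_call(url: str, body: str) -> Optional[str]:
    """Return 'RPC <method>' for a JSON-RPC call to /rpc, or None if it does not match."""
    # DOTALL lets .*? cross newlines in pretty-printed bodies.
    match = re.search(PATTERN, f"{url} {body}", re.DOTALL)
    return match.expand(NAME) if match else None


request_body = json.dumps({"jsonrpc": "2.0", "method": "session.open", "params": {"user": "demo"}, "id": 1})
print(name_rpc_call("/rpc", request_body))  # RPC session.open
```

A regex over raw JSON is a heuristic: the non-greedy match picks the first "method" key in the text, which could be a nested one if the client serializes params first.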

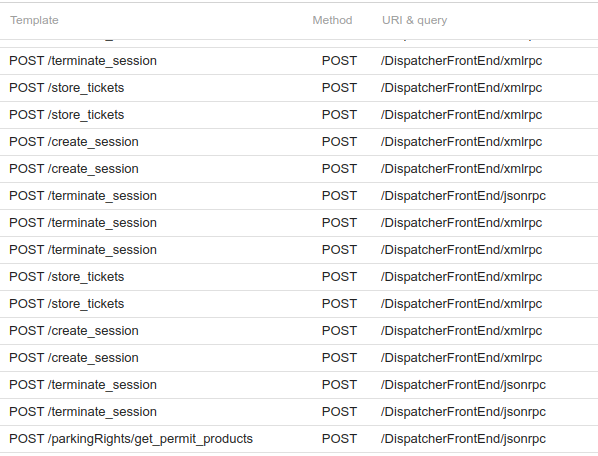

Example with PRM software configuration:

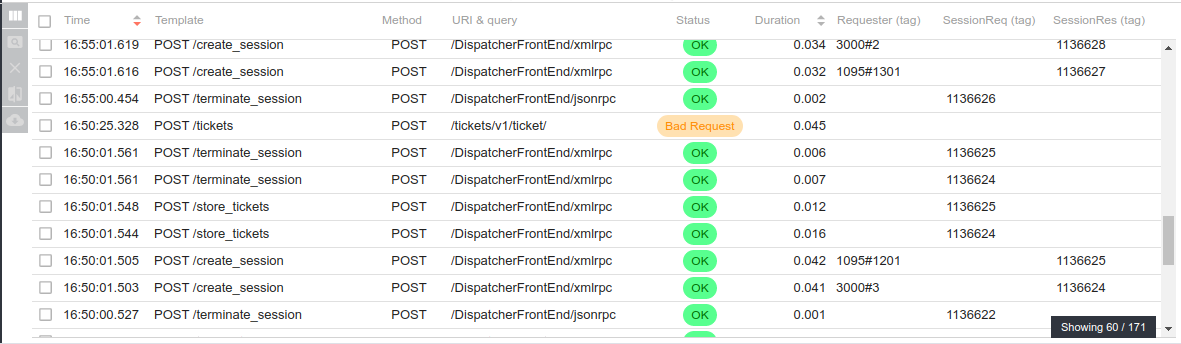

This makes PRM exchanges much more readable than with the URI alone :) !!

However, this must be used with caution:

- Parsing the headers increases memory usage on the server, but this is acceptable

- Parsing the body requires decoding it (it is otherwise handled as a raw buffer) and decompressing it when it is encoded with Gzip, Deflate or Brotli

- As Spider currently processes around 400 communications/s on Streetsmart, that could mean up to 400 decompressions every second if the body has to be analysed

- So body parsing must be restricted to specific conditions
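To give an idea of the per-request work involved, here is a sketch of body decoding driven by the Content-Encoding header, using Python's standard gzip and zlib modules. Brotli is not in the standard library: the sketch assumes the third-party brotli package and only imports it when a Brotli body shows up. It is illustrative only, not Spider's implementation.

```python
import gzip
import zlib
from typing import Optional


def decode_body(raw: bytes, content_encoding: Optional[str]) -> str:
    """Decompress a captured body according to Content-Encoding, then decode it as text."""
    encoding = (content_encoding or "identity").strip().lower()
    if encoding == "gzip":
        data = gzip.decompress(raw)
    elif encoding == "deflate":
        try:
            data = zlib.decompress(raw)  # zlib-wrapped deflate, as HTTP defines it
        except zlib.error:
            data = zlib.decompress(raw, -zlib.MAX_WBITS)  # raw deflate sent by some servers
    elif encoding == "br":
        import brotli  # third-party package, assumed to be installed
        data = brotli.decompress(raw)
    elif encoding == "identity":
        data = raw
    else:
        raise ValueError(f"unsupported Content-Encoding: {content_encoding}")
    return data.decode("utf-8", errors="replace")  # assumes a UTF-8 body
```

Each call allocates and decompresses a full buffer, which is the cost that would be paid hundreds of times per second if every body were parsed.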

I'm thinking of separating the body from the other inputs, to limit body parsing to certain URIs... but I am still unsure how to keep it easy to configure.
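One possible shape for that idea, purely as a sketch: each template gets a URI pattern that is always evaluated, plus an optional body pattern that is only evaluated (and the body only decoded) once the URI pattern has matched. The structure and names below are hypothetical and do not describe an existing Spider option.

```python
import re
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class SplitTemplate:
    name: str                           # may reference capture groups, e.g. "RPC \1"
    uri_pattern: str                    # cheap check, run on every request
    body_pattern: Optional[str] = None  # expensive check, run only after a URI match


def match_template(templates: List[SplitTemplate], uri: str, load_body: Callable[[], str]) -> Optional[str]:
    """First-match templating that decodes the body at most once, and only if needed."""
    body: Optional[str] = None
    for template in templates:
        uri_match = re.search(template.uri_pattern, uri)
        if not uri_match:
            continue
        if template.body_pattern is None:
            return uri_match.expand(template.name)  # groups come from the URI
        if body is None:
            body = load_body()  # e.g. decompress + decode, done lazily
        body_match = re.search(template.body_pattern, body)
        if body_match:
            return body_match.expand(template.name)  # groups come from the body
    return None


templates = [
    SplitTemplate("Health", r"^/health$"),
    SplitTemplate(r"RPC \1", r"^/rpc$", r'"method"\s*:\s*"([^"]+)"'),
]
decode_calls = []


def load_body() -> str:
    decode_calls.append(1)  # count how often the "expensive" step runs
    return '{"jsonrpc": "2.0", "method": "login", "id": 1}'


print(match_template(templates, "/health", load_body))  # Health
print(len(decode_calls))                                 # 0
print(match_template(templates, "/rpc", load_body))     # RPC login
print(len(decode_calls))                                 # 1
```

Passing the body as a callable keeps the decoding cost off every request whose URI never reaches a body-based template.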

We can even compute stats on PRM:

However... it is difficult to separate successes from errors when an API always returns 200.

That's where Tags come in! They are coming next!

What's coming next?

Request templates will allow us to:

- Derive a 'tagging' feature from them (the next blog post in the series)

- Enrich the network map display and tooltips (in the coming weeks)

- Run a cron-scheduled request on production to get automated stats on a regular basis

Deprecation

The existing URL template feature is deprecated and will be removed soon. Should this cause you any issues, please let me know.