Log codes are finally here :)

Log codes

I enforce them in every system I have helped create in my professional work... but I completely forgot about them when starting Spider. Hence some technical debt...

What are they? Error codes! Or, more precisely, log codes.

And missing them was painful, because:

- Log codes make it quick to group logs that share the same meaning or cause

- This is very useful:

  - To discard them

  - To analyse a few of them and check that no other errors are hiding among them

  - To group common issues from several services into a single one (e.g. an infrastructure issue)
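A minimal sketch of this grouping, assuming each log entry is a dict with hypothetical `code` and `service` keys (not Spider's actual log schema) and a hypothetical set of codes considered harmless enough to discard. Counting the distinct services behind each code is what makes a shared infrastructure issue stand out:

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class CodeGroup:
    """Aggregated view of all logs sharing one log code."""
    count: int = 0
    services: set = field(default_factory=set)


def group_by_code(logs, discarded_codes=frozenset()):
    """Group log entries by log code, skipping codes known to be noise.

    Each entry is assumed to be a dict with hypothetical 'code' and
    'service' keys; entries without a code are grouped under None.
    """
    groups = defaultdict(CodeGroup)
    for entry in logs:
        code = entry.get("code")
        if code in discarded_codes:
            continue  # known, harmless code: discard it
        group = groups[code]
        group.count += 1
        group.services.add(entry.get("service"))
    return dict(groups)
```

A code reported by many different services at once (for instance one emitted from common code) is a good hint that the root cause is shared infrastructure rather than a single service.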

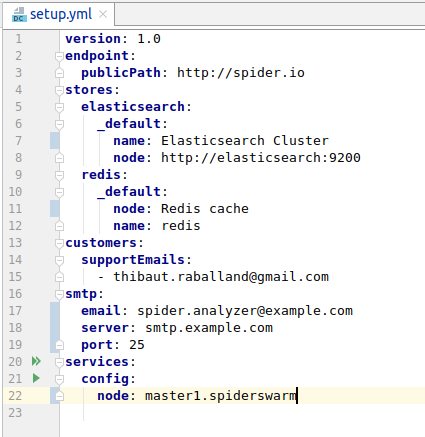

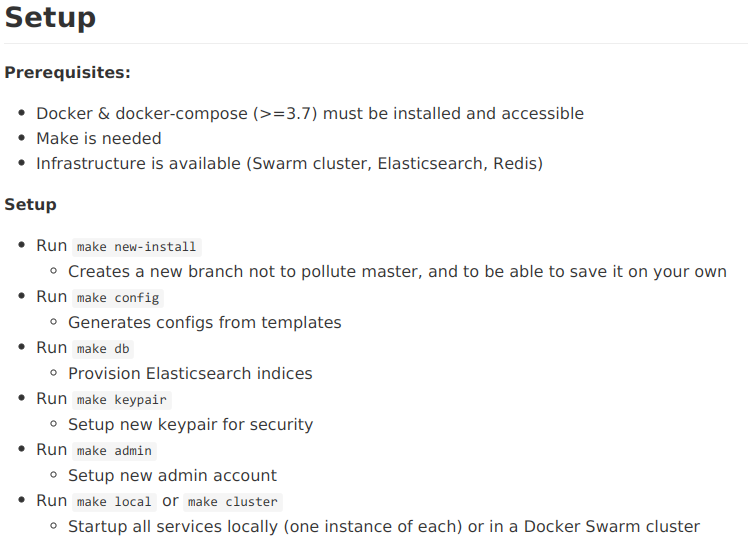

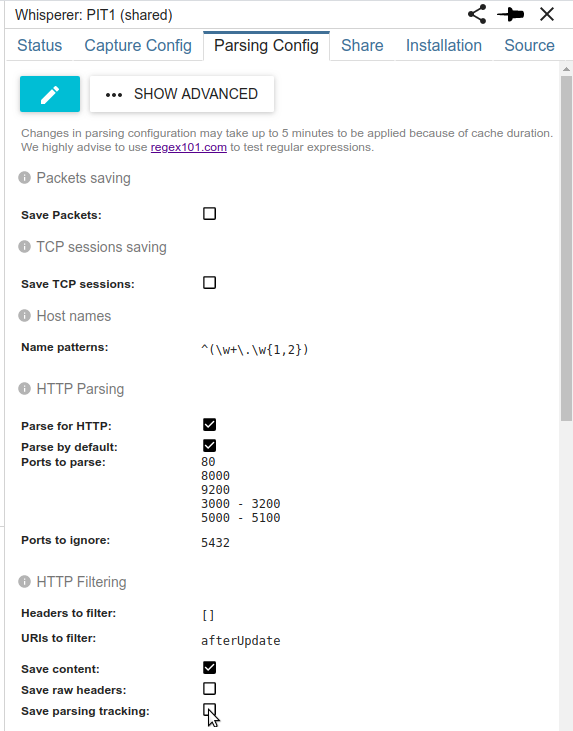

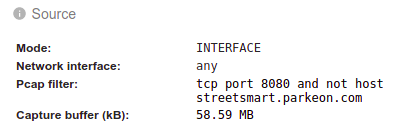

Spider implementation

So, during a code refactoring:

- I added a log code to every Spider log at the Info, Warn, Error and Fatal levels.

- Pattern: [Service code]-[Feature]-001+ (a sequential number starting at 001)

- The service code is replaced by XXX when the log comes from common (shared) code
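A small sketch of how codes following this pattern could be built and checked. The exact character rules are assumptions, not Spider's: a 3-character service code (as suggested by the XXX placeholder), an uppercase alphanumeric feature tag, and a zero-padded number of at least three digits. The service and feature names used here are hypothetical:

```python
import re

# Assumed shape: 3-character service code, uppercase feature tag,
# and a zero-padded number of at least 3 digits (001, 002, ...).
LOG_CODE_RE = re.compile(r"(?P<service>[A-Z0-9]{3})-(?P<feature>[A-Z0-9]+)-(?P<number>\d{3,})")

COMMON_SERVICE = "XXX"  # placeholder used for logs emitted by common code


def make_log_code(service, feature, number):
    """Build a log code such as 'PAR-PCAP-001' (hypothetical names)."""
    if number < 1:
        raise ValueError("log code numbers start at 001")
    code = f"{service}-{feature}-{number:03d}"
    if not LOG_CODE_RE.fullmatch(code):
        raise ValueError(f"invalid log code: {code}")
    return code


def parse_log_code(code):
    """Split a log code into (service, feature, number), or None if invalid."""
    m = LOG_CODE_RE.fullmatch(code)
    if m is None:
        return None
    return m.group("service"), m.group("feature"), int(m.group("number"))


def is_common_code(code):
    """True when the code was emitted from common code (service XXX)."""
    parts = parse_log_code(code)
    return parts is not None and parts[0] == COMMON_SERVICE
```

Validating codes at creation time keeps the pattern consistent, which is what makes grouping and filtering by code reliable later on.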

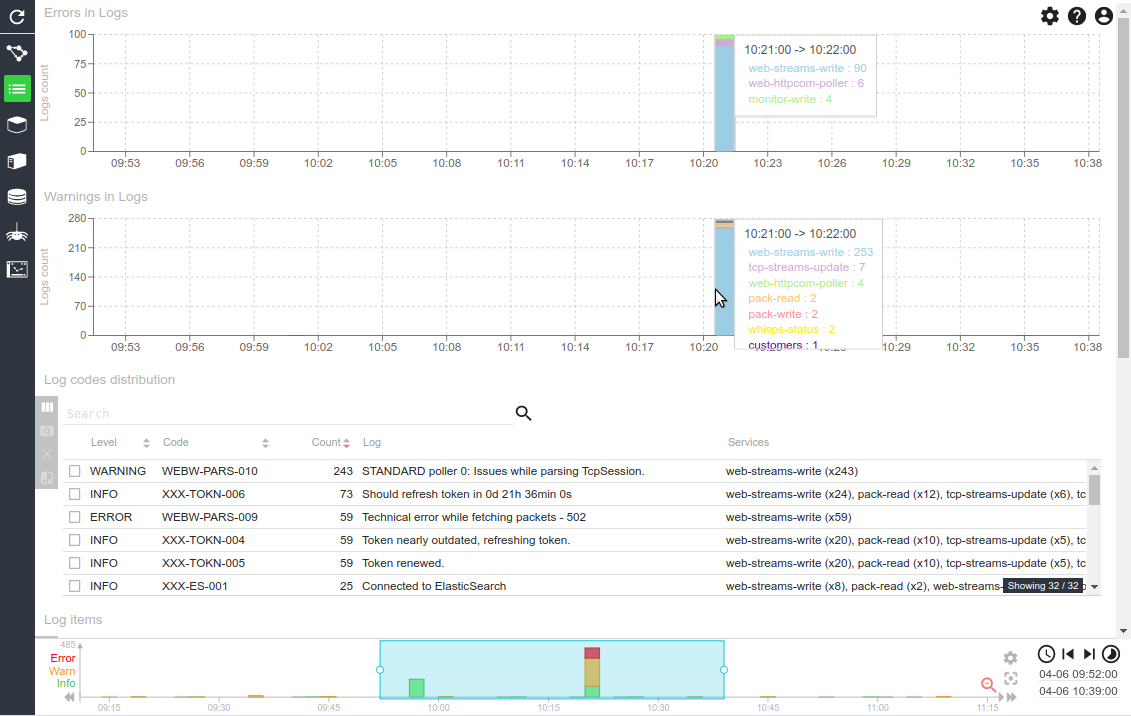

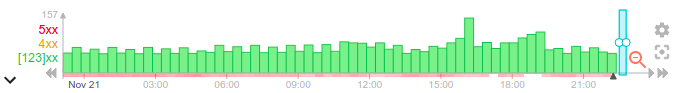

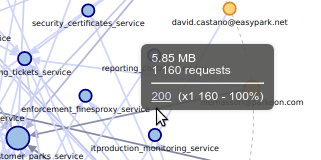

I then added a new grid to the monitoring that shows a short, quick analysis report on those logs.

At a glance, I can see what happened over the previous day and whether anything serious needs to be looked at ;) Handy, isn't it? I like it a lot!
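The grid's internals are not described here; as a rough sketch of the kind of aggregation such a daily report could run, assuming each entry is a dict with hypothetical `timestamp` (a `datetime`), `level` and `code` keys, the previous 24 hours can be counted per level and code, most severe first:

```python
from collections import Counter
from datetime import datetime, timedelta

# Severity order used to sort the report (most severe first).
LEVEL_ORDER = {"fatal": 0, "error": 1, "warn": 2, "info": 3}


def daily_report(logs, now, window=timedelta(days=1)):
    """Count logs per (level, code) over the last `window`, most severe first.

    Each entry is assumed to be a dict with hypothetical 'timestamp'
    (datetime), 'level' and 'code' keys. Unknown levels sort last.
    """
    since = now - window
    counts = Counter(
        (entry["level"], entry["code"])
        for entry in logs
        if since <= entry["timestamp"] <= now
    )
    return sorted(
        ((level, code, n) for (level, code), n in counts.items()),
        # severity first, then most frequent codes, then code name
        key=lambda row: (LEVEL_ORDER.get(row[0], len(LEVEL_ORDER)), -row[2], row[1]),
    )
```

Sorting by severity before frequency keeps a single Fatal on top of a flood of Warn logs, which matches the "anything serious?" question the grid is meant to answer.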

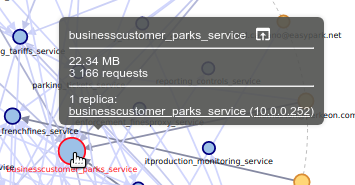

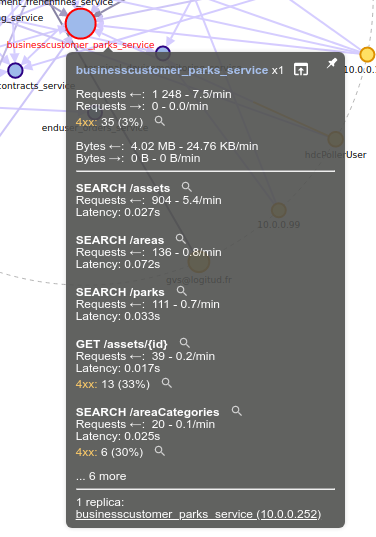

Demo

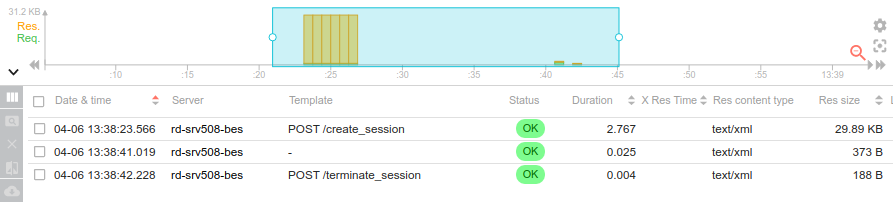

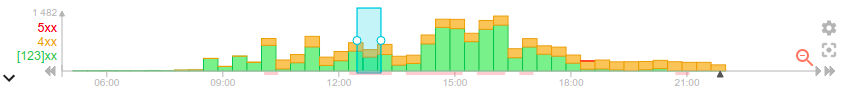

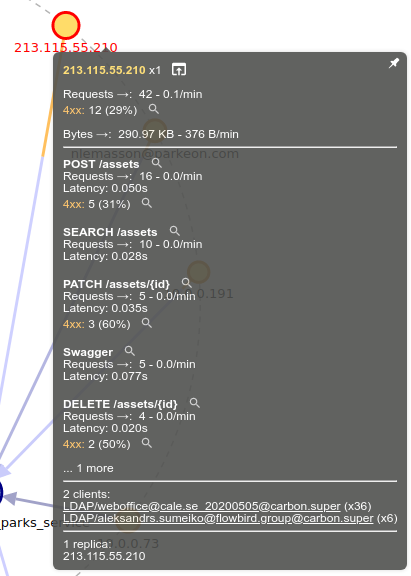

For instance, today at 11:21:

- Many errors were generated during the parsing process

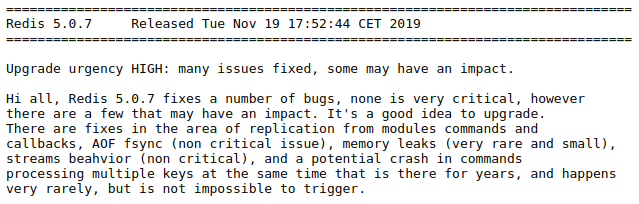

- At the same time, 25 service instances reconnected to Elasticsearch and Redis at once...

- This looks like a server restart

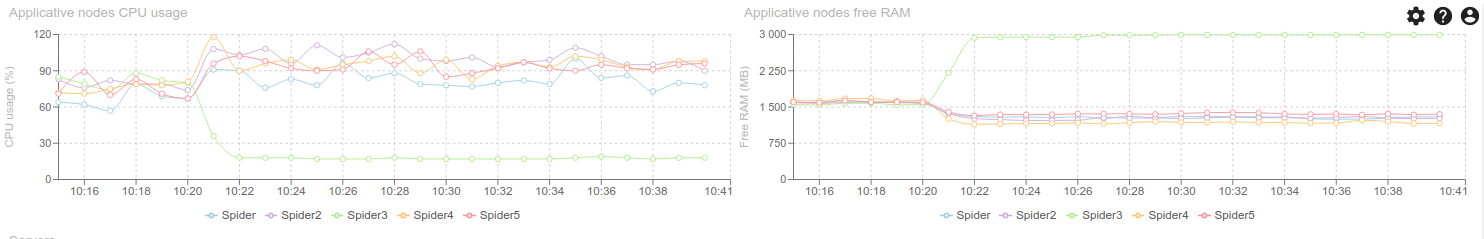

Indeed, looking at the server stats:

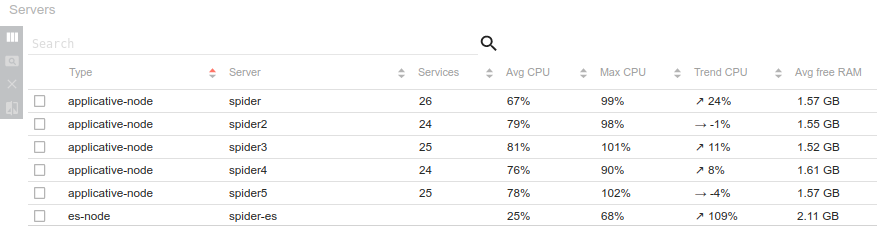

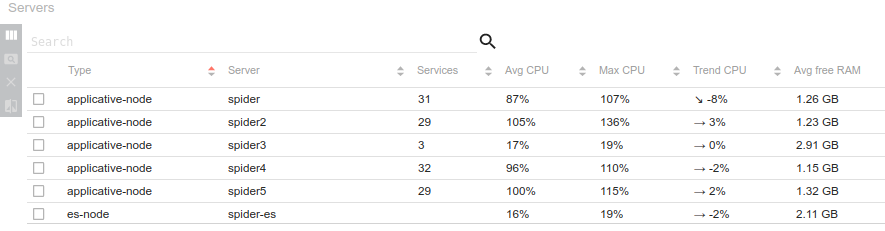

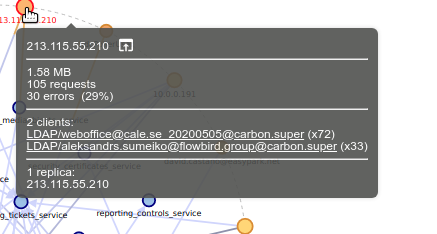

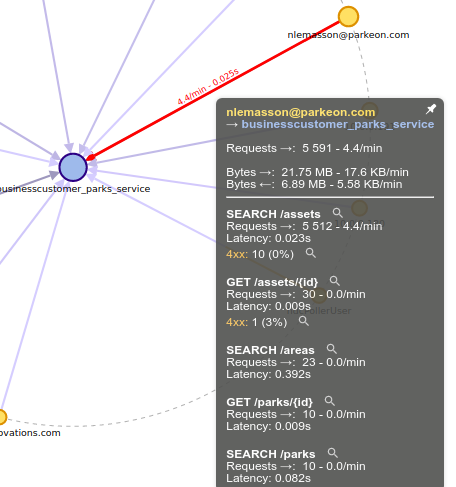

- The Spider3 server restarted, and its services were reallocated to other nodes:

  - Huge CPU drop and free RAM increase on Spider3

  - CPU increase and free RAM decrease on the other nodes

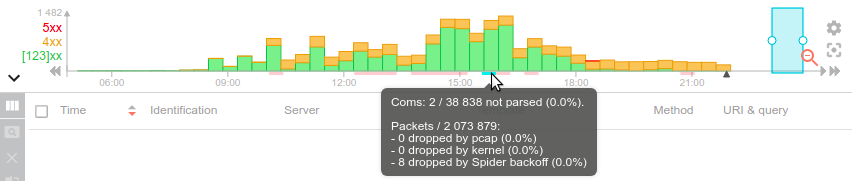

This is confirmed by the grids.

Before

After

Why this restart? Who knows? Some AWS issue, maybe... Anyway, who cares ;-) The system handled it gracefully!

- I checked the server itself... but its uptime showed it had been running for days. So the server had not actually restarted...

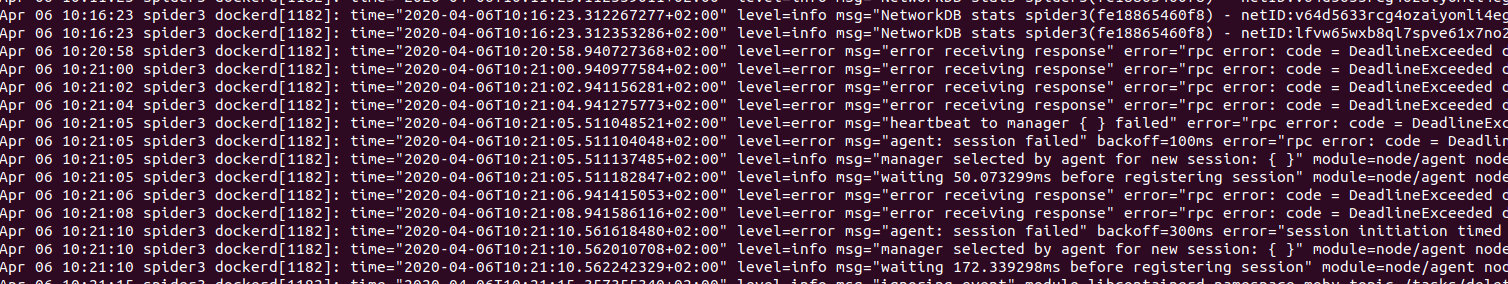

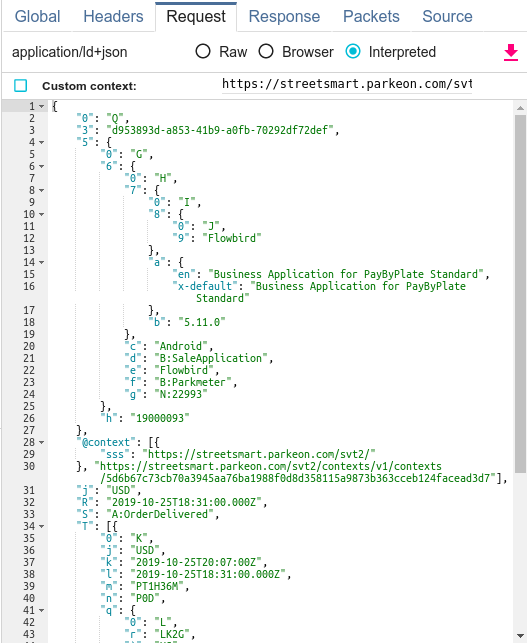

- I then checked the Docker daemon logs (`journalctl -u docker.service`):

  - `msg="error receiving response" error="rpc error: code = DeadlineExceeded desc = context deadline exceeded"`

  - `msg="heartbeat to manager failed" error="rpc error: code = DeadlineExceeded desc = context deadline exceeded" method="(*session).heartbeat" module=node/ag`

  - `msg="agent: session failed" backoff=100ms error="rpc error: code = DeadlineExceeded desc = context deadline exceeded" module=node/agent node.id=75fnw7bp17gcyt`

  - `msg="manager selected by agent for new session: " module=node/agent node.id=75fnw7bp17gcytn91d0apaq5t`

  - `level=info msg="waiting 50.073299ms before registering session"`
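Going through such logs by hand gets tedious. A minimal sketch, assuming the daemon lines are in logfmt style (`key=value`, values optionally double-quoted) as in the excerpts above, that counts which messages carry a `DeadlineExceeded` error. It relies on Python's `shlex` for quote handling; tokens without `=` (such as the journald timestamp and host prefix) are simply ignored:

```python
import shlex
from collections import Counter


def parse_logfmt(line):
    """Parse a logfmt-style line into a dict (values may be double-quoted).

    Returns an empty dict if the quoting is unbalanced (shlex raises
    ValueError), e.g. for a stray apostrophe in free text.
    """
    try:
        tokens = shlex.split(line)
    except ValueError:
        return {}
    fields = {}
    for token in tokens:
        key, sep, value = token.partition("=")  # split on the first '=' only
        if sep:
            fields[key] = value
    return fields


def count_swarm_timeouts(lines):
    """Count messages whose 'error' field mentions a gRPC DeadlineExceeded."""
    counts = Counter()
    for line in lines:
        fields = parse_logfmt(line)
        if "DeadlineExceeded" in fields.get("error", ""):
            counts[fields.get("msg", "")] += 1
    return counts
```

Feeding it the output of `journalctl -u docker.service` for the incident window would show at a glance how many heartbeats and sessions timed out.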

It looks like this node could not reach the Swarm manager for a while (its heartbeats timed out), so its services were reallocated to other nodes... Sounds like a network partition on AWS.

They say you have to make sure your architecture is resilient in the Cloud... Done ;-)

However, I still need to rebalance the services manually... for now :(
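For reference, one common way to rebalance manually in Docker Swarm is to force an update of each service: `docker service update --force` restarts the service's tasks even when nothing changed, so the scheduler can spread them over all available nodes again. A minimal sketch wrapping that CLI, which must run on a manager node; it defaults to a dry run because each forced update restarts the service's tasks:

```python
import subprocess


def list_services():
    """Return the names of all Swarm services (run on a manager node)."""
    out = subprocess.run(
        ["docker", "service", "ls", "--format", "{{.Name}}"],
        check=True, capture_output=True, text=True,
    ).stdout
    return [name for name in out.splitlines() if name]


def rebalance_commands(services):
    """Build one forced-update command per service."""
    return [["docker", "service", "update", "--force", name] for name in services]


def rebalance(services, dry_run=True):
    """Print (dry run) or execute the forced updates, one service at a time."""
    for cmd in rebalance_commands(services):
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)
```

Usage would be `rebalance(list_services(), dry_run=False)` once the dry-run output looks right.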