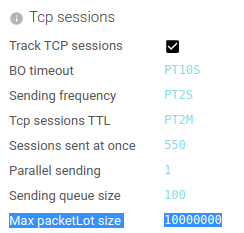

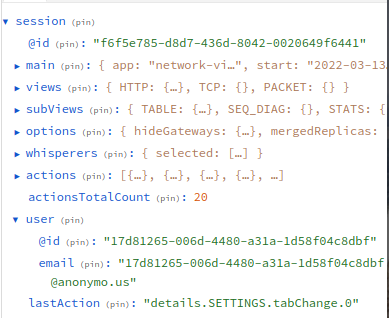

New parameter to protect against oversized packetLots

To protect servers and the UI against oversized communications, a new safeguard is available:

When a TCP packetLot exceeds a configurable size limit, its packets are flagged as plTooBig and skipped during parsing.

- The packet flag is shown in Packet details

- The packet is colored grey in TCP details

- Parsed communications are marked as INCOMPLETE (since they are missing packets), and the capture status reports the parsing errors
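The flagging behavior described above can be sketched roughly as follows. This is a minimal illustration, not Whisperer's actual implementation: `Packet`, `PacketLot`, `mark_too_big`, `parse_communication` and `PL_MAX_BYTES` are hypothetical names, and reading "10 MB" as 10 × 1024 × 1024 bytes is an assumption. Only the `plTooBig` flag and the INCOMPLETE status come from this note.

```python
from dataclasses import dataclass, field
from enum import Enum

# Hypothetical default limit; interpreting "10 MB" as 10 MiB is an assumption.
PL_MAX_BYTES = 10 * 1024 * 1024


class Status(Enum):
    COMPLETE = "COMPLETE"
    INCOMPLETE = "INCOMPLETE"


@dataclass
class Packet:  # hypothetical packet model
    size: int
    flags: set = field(default_factory=set)


@dataclass
class PacketLot:  # hypothetical model of a TCP packetLot
    packets: list

    def total_size(self) -> int:
        return sum(p.size for p in self.packets)


def mark_too_big(lot: PacketLot, limit: int = PL_MAX_BYTES) -> bool:
    """Flag every packet of a lot exceeding `limit` with plTooBig; return True if flagged."""
    if lot.total_size() <= limit:
        return False
    for p in lot.packets:
        p.flags.add("plTooBig")
    return True


def parse_communication(lots: list) -> tuple:
    """Skip lots containing plTooBig packets; report INCOMPLETE if any lot was skipped."""
    parsed, skipped = 0, 0
    for lot in lots:
        if any("plTooBig" in p.flags for p in lot.packets):
            skipped += 1
            continue
        parsed += 1  # placeholder for the real protocol parsing step
    status = Status.INCOMPLETE if skipped else Status.COMPLETE
    return status, parsed, skipped
```

For example, with one small lot and one lot of two 6 MiB packets, only the second is flagged, so the communication is parsed from the first lot alone and reported as INCOMPLETE.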

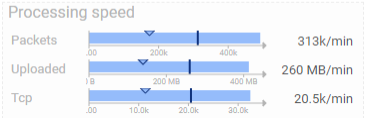

This avoids loading too many subsequent packets into memory for parsing. The default limit is 10 MB, which should be more than enough in most cases.
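One way to get the memory benefit mentioned above is to stop retaining payloads as soon as a lot crosses the limit. The sketch below assumes the size check is done incrementally as packets arrive, which this note does not state; `LotBuffer` and its methods are hypothetical names used for illustration only.

```python
# Hypothetical default; interpreting "10 MB" as 10 MiB is an assumption.
PL_MAX_BYTES = 10 * 1024 * 1024


class LotBuffer:
    """Hypothetical buffer for one TCP packetLot that stops retaining data once oversized."""

    def __init__(self, limit: int = PL_MAX_BYTES) -> None:
        self.limit = limit
        self.seen_bytes = 0   # total bytes observed, including those past the limit
        self.payloads = []    # payloads kept for later parsing
        self.too_big = False

    def add(self, payload: bytes) -> None:
        self.seen_bytes += len(payload)
        if self.too_big:
            return  # lot already oversized: subsequent packets are not kept in memory
        if self.seen_bytes > self.limit:
            self.too_big = True
            self.payloads.clear()  # release buffered data; this lot will not be parsed
            return
        self.payloads.append(payload)

    def retained_bytes(self) -> int:
        return sum(len(p) for p in self.payloads)
```

With this design, memory held per lot stays bounded by the limit no matter how much traffic follows, while `seen_bytes` still records how large the lot actually was.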

The associated Whisperer version is 5.1.0.