Parsing quality

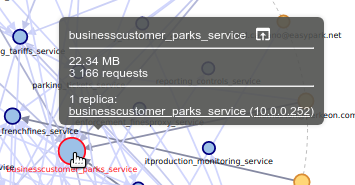

I recently added a new feature to the Spider timeline:

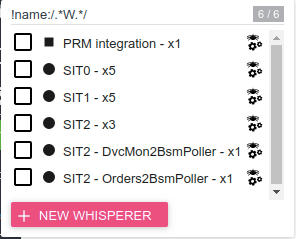

It now shows the parsing quality of Spider for the selected Whisperer(s).

What do I mean by this?

Explanation

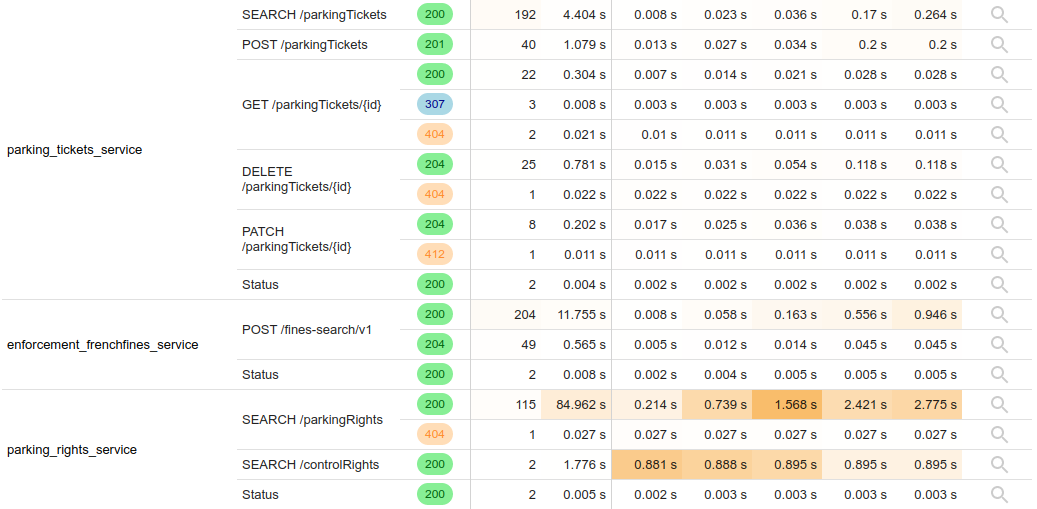

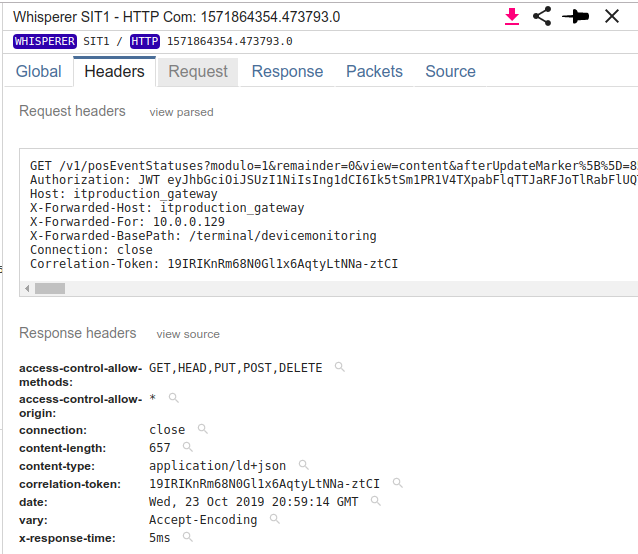

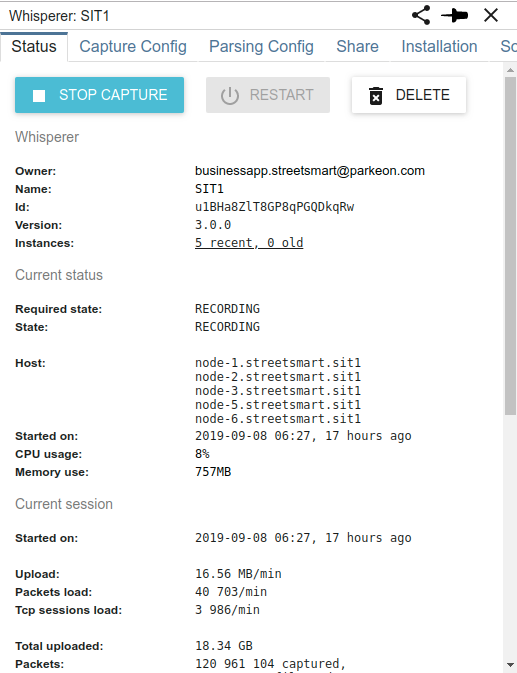

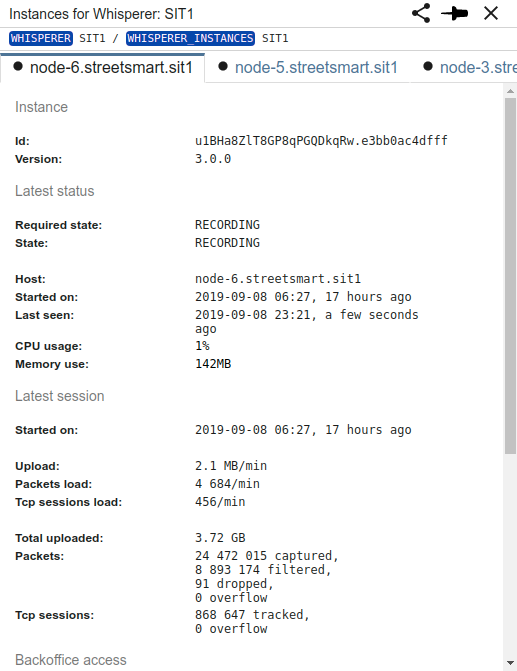

Spider captures packets, then sends them to the server, which rebuilds the network communications. Because of the massive number of packets received (around 4,000/s on the Streetsmart platform, 300 million a day), they are processed in real time, with many buffers to absorb the spikes.
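To give an intuition of why buffers absorb spikes but can still overflow, here is a minimal sketch of a bounded buffer fed by bursty arrivals and drained at a steady rate. It is not Spider code: the function name, the per-tick model and the traffic numbers are illustrative assumptions.

```python
def simulate_buffer(arrivals, drain_per_tick, capacity):
    """Simulate a bounded buffer between a bursty producer and a steady consumer.

    Illustrative model only (not Spider code):
    - arrivals: packets arriving during each tick
    - drain_per_tick: packets the consumer can process per tick
    - capacity: maximum number of packets the buffer can hold
    Returns (processed, dropped, still_buffered).
    """
    level = processed = dropped = 0
    for incoming in arrivals:
        # Accept what fits; anything beyond capacity is lost.
        accepted = min(incoming, capacity - level)
        dropped += incoming - accepted
        level += accepted
        # The consumer drains at most drain_per_tick packets per tick.
        served = min(drain_per_tick, level)
        level -= served
        processed += served
    return processed, dropped, level


# Steady 3,000 packets/tick with a two-tick spike at 9,000 packets/tick.
traffic = [3000, 3000, 9000, 9000, 3000, 3000]
print(simulate_buffer(traffic, drain_per_tick=4000, capacity=5000))   # (21000, 9000, 0)
print(simulate_buffer(traffic, drain_per_tick=4000, capacity=20000))  # (22000, 0, 8000)
```

With the small buffer, the spike overflows and 9,000 packets are lost; with the larger one, the same spike is absorbed and simply drained a bit later.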

However, when a spike is too big, things can go wrong in many places:

- On the client side:
  - The kernel buffer may fill up before pcap processes all packets.
  - The pcap buffer (network capture) may fill up as well.
  - Spider server may not be able to absorb the load, and the Whisperer buffer may overflow while the Whisperer backs off.
  - The same may happen for Tcpsessions tracked on the client side... but I have never seen this happen.
- On the server side:
  - Under too much load, or because of an infrastructure issue, the parser may not find all packets (Redis buffer full) and fail to rebuild the communication.
  - There is also currently a 'last' bug, which also happens under high load, where packets sometimes cannot be found... although they SHOULD be there (I'm trying to track this one down, but it is not easy).
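One way to picture what a quality indicator has to gather is one loss counter per failure point listed above. The sketch below is hypothetical: the class, the stage names and the idea of summing them per period are assumptions for illustration, not Spider's actual data model.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class LossReport:
    """Hypothetical per-period loss counters, keyed by failure point."""

    drops: Dict[str, int] = field(default_factory=dict)

    def record(self, stage: str, count: int) -> None:
        # Accumulate losses reported by one stage (kernel, pcap, Redis, ...).
        self.drops[stage] = self.drops.get(stage, 0) + count

    def total(self) -> int:
        # All losses for the period, whatever the stage.
        return sum(self.drops.values())

    def worst_stage(self) -> Optional[str]:
        # Stage with the most losses, or None if no stage has reported.
        if not self.drops:
            return None
        return max(self.drops, key=self.drops.get)


report = LossReport()
report.record("pcap_buffer", 6)        # hypothetical stage names
report.record("whisperer_backoff", 2)
print(report.total(), report.worst_stage())  # 8 pcap_buffer
```

Keeping the counters per stage, rather than a single total, is what lets you tell a pcap problem from a server-side one.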

All in all, there are many places where things may go wrong, which could explain why you don't find the communications you expected!

Information on whether the system is healthy is available in Spider monitoring, but it is not so easy to interpret.

Quality line in timeline

So I enhanced the timeline to let you know when missing data MAY be a Spider issue... or whether it is indeed due to the system being monitored ;)

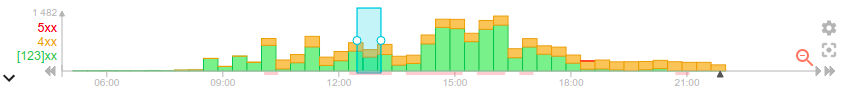

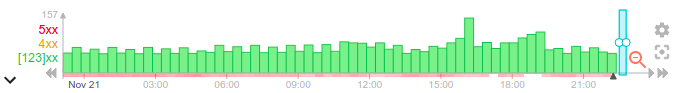

Below the X axis, you may see parts underlined in red. They tell you that there were parsing issues during that period: the redder, the worse. (The timeline is showing PIT1 on November 21st.)

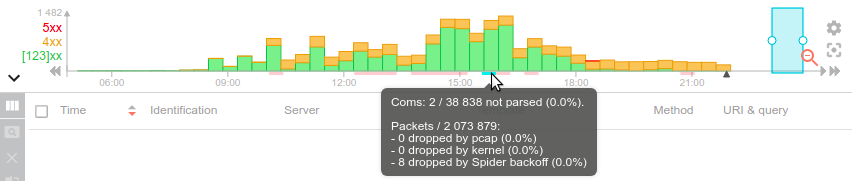
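The "redder, the worse" rule can be read as a loss ratio compared against a few thresholds. This is a minimal sketch: the threshold values and the function name are assumptions, not Spider's actual settings.

```python
from bisect import bisect_right

# Hypothetical loss-ratio thresholds; shade 1 is light red, shade 4 the darkest.
THRESHOLDS = (0.0, 1e-4, 1e-3, 1e-2)


def quality_shade(lost: int, total: int, thresholds=THRESHOLDS) -> int:
    """Map a loss ratio to a shade index: 0 means no underline at all."""
    if total <= 0 or lost <= 0:
        return 0
    # bisect_right counts how many thresholds the ratio has reached.
    return bisect_right(thresholds, lost / total)


print(quality_shade(0, 2_000_000))  # 0: nothing lost, no red
print(quality_shade(8, 2_000_000))  # 1: light red
print(quality_shade(50, 1_000))     # 4: darkest red
```

Using a ratio rather than a raw count means a few lost packets during a very busy period stay light red, while the same count on a quiet period would look worse.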

You will then find detailed information when hovering over the red parts:

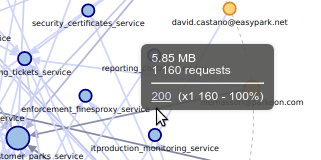

Lots of red!!

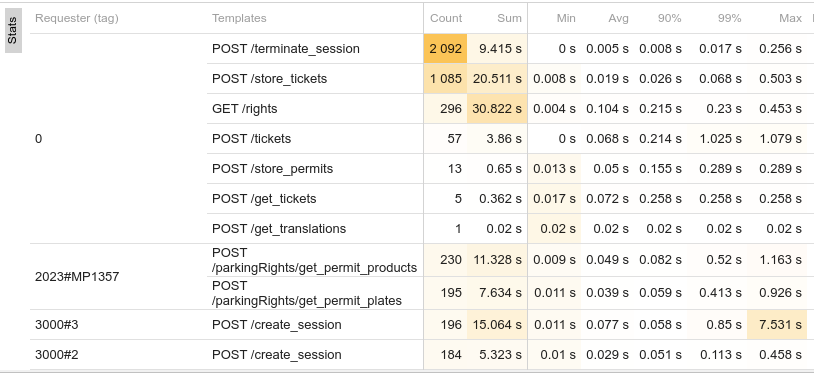

Don't be afraid, the quality is good:

- Light red is the first threshold. Here, we lost 8 packets out of 2 million! It looks like there was a surge of load at that time, and Spider could not absorb it.

- Over the full day on PIT1, we could not parse 11 communications out of 2.2 million. That's 99.9995% quality!
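The quality figure is just the share of items handled successfully. A minimal sketch of that arithmetic, applied to the two figures above (the function name is hypothetical):

```python
def quality_percent(failed: int, total: int) -> float:
    """Share of items (packets or communications) handled successfully, in percent."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * (total - failed) / total


# Figures quoted above for PIT1.
print(f"{quality_percent(8, 2_000_000):.4f}%")   # 99.9996% of packets kept
print(f"{quality_percent(11, 2_200_000):.4f}%")  # 99.9995% of communications parsed
```

In other words, 11 failures out of 2.2 million is 5 failures per million communications.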

If failures happen too often, the buffers need to be enlarged, or the server scaled up...

So now you know, and when it gets really red, call me for support ;-)

Hide the line

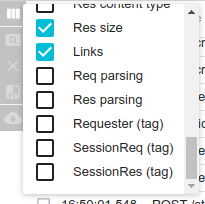

You can hide this quality line, and thus avoid running its queries, from the timeline menu. This setting is saved in your user settings.

Last info

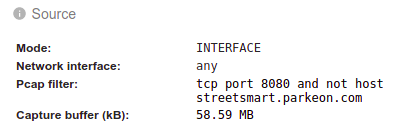

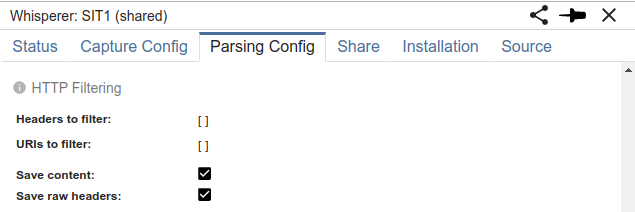

This is the PRM integration platform. It is much redder: many packets are lost by pcap, and this is due to the old Ubuntu version!

On this Ubuntu version, pcap filters are applied after capture, not before. Thus, the pcap driver captures all packets of all communications into its buffer before filtering them and sending the matching ones to the Whisperer.
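To see why filtering after capture hurts so much, here is a simplified model of the statement above: it only compares how many bytes per second land in the capture buffer in each case. It is not a description of pcap internals, and the traffic figures and function name are made up for illustration.

```python
def capture_buffer_inflow(total_pps: float, avg_packet_bytes: float,
                          match_ratio: float, filter_before_buffer: bool) -> float:
    """Bytes per second written into the capture buffer (illustrative model).

    match_ratio is the fraction of packets the capture filter keeps.
    If the filter runs before buffering, only matching packets are stored;
    if it runs after, every packet on the interface is stored first.
    """
    stored_pps = total_pps * match_ratio if filter_before_buffer else total_pps
    return stored_pps * avg_packet_bytes


# Hypothetical interface: 50,000 packets/s of 800 bytes, 10% matching the filter.
before = capture_buffer_inflow(50_000, 800, 0.10, filter_before_buffer=True)
after = capture_buffer_inflow(50_000, 800, 0.10, filter_before_buffer=False)
print(before / 1e6, after / 1e6)  # MB/s into the buffer: 4.0 vs 40.0
```

With only 10% of the traffic being relevant, filtering late makes the buffer absorb ten times more data for the same useful output.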

I enlarged the pcap buffer from 20 to 60 MB on this server, but it is still not enough. Yvan, if you are missing too many communications, this may be the cause, and we can enlarge the buffer again. It is easy to do; it is done in the UI ;-)
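A quick way to reason about buffer size is to ask how long a spike can last before the buffer is full. This sketch assumes a constant inflow and drain rate during the spike, and assumes "MB" means mebibytes; the rates are hypothetical.

```python
import math

MIB = 1024 * 1024  # assuming "MB" means mebibytes here


def seconds_until_full(buffer_mb: float, inflow_bps: float, drain_bps: float) -> float:
    """How long a buffer can absorb a spike before it overflows.

    inflow_bps / drain_bps: bytes per second entering / leaving the buffer.
    Returns math.inf if the consumer keeps up with the inflow.
    """
    surplus = inflow_bps - drain_bps
    if surplus <= 0:
        return math.inf
    return buffer_mb * MIB / surplus


# Hypothetical spike: 12 MiB/s arriving, 2 MiB/s drained.
for size in (20, 60):
    print(size, "MB ->", seconds_until_full(size, 12 * MIB, 2 * MIB), "s")
# 20 MB -> 2.0 s
# 60 MB -> 6.0 s
```

Tripling the buffer triples how long a spike can be absorbed, but if the surplus lasts longer than that, packets are lost again, which is why a bigger buffer may still not be enough.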