Spider Helmchart is available!

Setting up Spider on Kubernetes is now easier than ever with Spider Helmchart.

Context

For a couple of years now, Spider installation has been quite easy.

There was a set of [installation scripts], all packaged in a Docker image, that were:

- Generating the config from a setup file and templates

- Setting up the databases and indices

- Creating the keys

- Spawning the services

- Creating the administrators

- Etc.

But it required various tools to be installed on the workstation doing the setup: Git, Docker, sed... It also relied on a set of commands that had to be tweaked in case of failures (timeouts, etc.).

The script set was efficient and, when used right, the installation took a couple of minutes. But the scripts needed maintenance for every new service or option.

I had several improvements pending:

- Rework of the setup to move index creation/migration into init containers

- Rework to integrate Demo / Dev / Debug options that were done by custom templates (and specific knowledge)

- Adding logic in the custom templating to manage conditions...

All of these would have required specific coding had I continued this way.

Aside from this, I received a request / requirement from the ops of my first client to create a Helmchart for Spider to integrate it in their GitOps tooling, using Argo CD!

So, even though this tooling was of good quality, and even though it had saved me hours and hours in setting up and upgrading Spider installations... I decided to move away from it and use the standard tooling now available.

Let's work on creating a Helmchart for Spider!

Story

| Step | Description | Duration |
|---|---|---|
| Doc reading | Good practice: read the docs before starting. Learn Helm. | 3h |
| Design | Design around the right patterns: umbrella pattern, tpl function... | 3h |
| Dev | Dev as refactored Helm charts, using YAML anchors to keep the original setup.yml structure. Reusing the index creation script as is in an init container. | 10h |
| Test | Discovering that anchors are a bad idea (... and that it was written in the doc 😕 !!) | 4h |
| Rework of architecture | Transformation of the values file to make the best use of Helm templating features and constraints | 4h |
| POC validation | Running a first couple of microservices with gateway, Redis and Elasticsearch. | 2h |
| All services | Configure all services using the same patterns (going at scale) | 6h |
| Tests & fixes | | 2h |
| Demo features | Inject data when the Demo flag is set, with an injected whisperer capturing Spider's own communications | 3h |
| Restore from S3 as a post-install job | Option to restore the configuration data from a daily backup at installation, allowing a reinstall without losing anything. | 3h |
| Deploy & fixes | | 2h |
| Computing hash of secrets | To allow automatic restart... Trials and failures :( | 5h |
| Documentation | This blog item, setup instructions, Helm values reference, JSON schemas | 7h |

In all, around 50h of work, spread over evenings across 4 weeks.

I had the feeling it was going to be long!

But I'm really happy with the resulting quality 😁

Architecture

Umbrella pattern

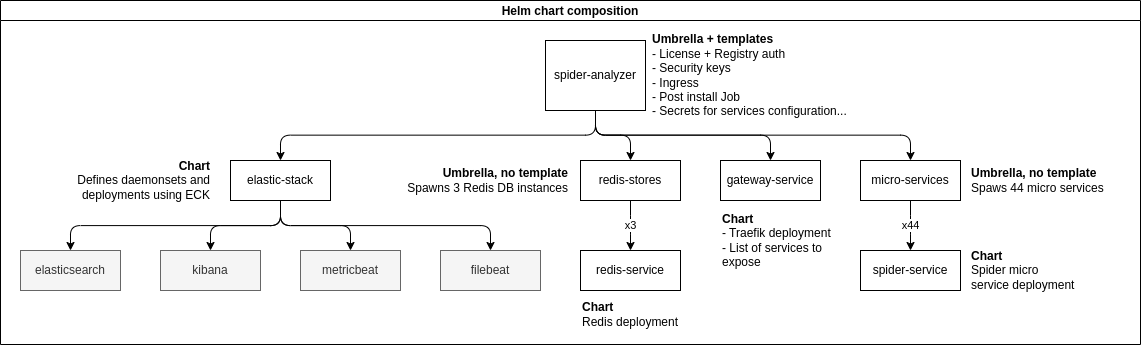

The Spider Helm chart takes advantage of the Umbrella pattern to compose the global Spider application out of many subcharts.

The main chart holds the manifests for the main secrets and the services' configuration.

It also provides the ingress and the post-install Job templates as they are global to all.

Then the Elastic Stack components, the Redis DB, the Traefik gateway and the Spider microservices each live in a separate chart.

Redis and Spider microservices are also Umbrella charts, making use of aliases to instantiate many objects from the same customisable chart.

This nice feature makes it possible to have a single chart that instantiates the deployments, HPAs and services of all Spider microservices.

Fewer templates, less code, easier maintenance 😁!
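As a rough sketch of the alias mechanism, the `Chart.yaml` of such an umbrella chart could look like the following. The chart name `microservice`, the alias `teams`, the versions and the `file://` paths are illustrative assumptions, not the actual Spider chart layout.

```yaml
# Chart.yaml of a hypothetical "spider-microservices" umbrella chart.
apiVersion: v2
name: spider-microservices
version: 0.1.0
dependencies:
  # The same generic chart is declared several times, once per alias.
  - name: microservice            # hypothetical generic chart
    version: 0.1.0
    repository: file://../microservice
    alias: customer
  - name: microservice
    version: 0.1.0
    repository: file://../microservice
    alias: teams                  # hypothetical second service
```

Each alias then becomes its own top-level key in the umbrella's values file, so `customer:` and `teams:` can carry different settings for the same templates.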

Tpl function

Spider uses a 'configuration service' providing - in json - the configuration for each microservice.

I needed to find a way to use Helm templating in JSON.

And in fact, it works out of the box! Helm templating works on ANY type of file.

You have two ways to use it:

- either have your template in the `templates` folder, with a name starting with `_`.
  - That file will be processed by the templating engine, but not sent to Kubernetes as an object.
  - Nice... but sad to have to keep it in this `templates` folder.

- or... you may use the magical `tpl` function

The tpl function allows you to apply the templating engine on any string that you load.

With one line of code, you can turn a bunch of JSON templates into a ConfigMap (or a Secret) that you can integrate in any deployment.

Magic!

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: config-apps
type: Opaque
stringData:
  # One key per JSON template found under config/apps, named after the file
{{- range $path, $bytes := .Files.Glob "config/apps/*.cfg.json" }}
{{ base $path | indent 2 }}: {{ tpl ($.Files.Get $path) $ | fromJson | toJson | quote }}
{{- end }}
```

I used the fromJson | toJson trick to make sure Helm would blow up in my face in case of wrongly generated JSON ;)

It is so powerful that I reused it to process and generate my Elasticsearch indices templates!

Global keys

One of the difficulties of the Umbrella pattern is that some values are global and need to be provided to many services... without you wanting to copy the value in many places in the values file.

You may use the tpl function to use templating in the values file... but it cannot work with subcharts, as they can't access the values of the parent.

That's where the global key comes to the rescue.

In the top level values file, I included a global key for all values I did not want to repeat...

And also for values that 'felt right' in this global semantic scope 😉

```yaml
global:
  # Spider system version to use
  # - use 'latest' to be always up-to-date, frequent upgrades
  # - use a GIT tag of Spider GIT repo - master branch - to use a specific version. Ex: 2020.04.04
  version: 2023.04.11
  endpoint:
    host: spider.mycompany.io # host where ingress will be deployed
    publicPath: http://spider.mycompany.io # endpoint on which the UIs and services will be exposed. Ex: http(s)://path.to.server
    ip: "" # ip where server runs. used when running dev / demo whisperers to inject in host file
  isDemo: false # true to activate demo features (injected whisperers, team and customers, self capture whisperer)
  isDev: false # true to activate dev features (change license server)
  license:
    key: idOfTheLicense
    privateKey: 'PEM' # use single quotes to protect '\n's
  jwt: # Key pair to secure communications (jwt)
    privateKey: 'PEM'
    publicKey: 'PEM'
```

Nice!
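To illustrate, a subchart template can read these shared values through `.Values.global`, whatever its depth in the chart tree. The fragment below is only a minimal sketch: the `PUBLIC_PATH` variable name, the `app` label and the image naming scheme are assumptions, not taken from the Spider charts.

```yaml
# templates/deployment.yaml of a hypothetical microservice subchart
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {{ .Values.name }}
spec:
  selector:
    matchLabels:
      app: {{ .Values.name }}
  template:
    metadata:
      labels:
        app: {{ .Values.name }}
    spec:
      containers:
        - name: {{ .Values.name }}
          # The version comes from the parent's global key, not from this subchart's own values
          image: "{{ .Values.image.name }}:{{ .Values.global.version }}"
          env:
            - name: PUBLIC_PATH   # hypothetical variable name
              value: {{ .Values.global.endpoint.publicPath | quote }}
```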

Structure of values file

I worked a lot on the structure of the values file to make it 'understandable'.

Helm is not very flexible when working with subcharts. Their customized values must be under a top level key. I thought that I could play with YAML anchors to work around it, but it failed. The YAML is parsed too many times for anchors to be usable, as explained in the reference documentation.

But I managed to reach an acceptable state, playing with the Umbrella pattern (an extra umbrella for Redis stores and Spider microservices).

The final structure, as shown in the values.yaml reference, is organized as follows:

- Structural configuration
  - global keys
  - registries
  - ingress
  - smtp (*)
  - extraCA (*)
  - elasticsearchStores (*)
- Applicative configuration
  - customers
  - backup
  - alerting
  - gui
  - tuning
- Infrastructure configuration
  - elasticsearch
  - redis
  - gateway
  - spider microservices

(*) I had to move those to global to be able to generate secrets checksum for automatic restart of deployment

Automatic restart of deployments

For helm upgrade to be frictionless, you have to make sure your deployments restart when files they depend on, like ConfigMaps and Secrets, have changed.

As Spider microservices are using a 'config' service to get their config, they restart within 1 minute of a configuration change.

No need to bother here.

But some secrets or configMap are not 'watched' by services, and you either have to restart them manually, or find a nice way to do it automatically.

The trick is to use annotations with a value computed based on the hash of the dependency file, as explained in Helm documentation.
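The pattern from the Helm documentation looks roughly like this, assuming a `configmap.yaml` template that lives in the same chart as the deployment:

```yaml
# Fragment of a Deployment template; only the annotation part is shown.
kind: Deployment
spec:
  template:
    metadata:
      annotations:
        # The hash changes whenever the rendered configmap.yaml changes,
        # which alters the pod template and triggers a rolling restart.
        checksum/config: {{ include (print $.Template.BasePath "/configmap.yaml") . | sha256sum }}
```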

However, as my secrets are generated as templates with variables, I cannot just take the file hash!

And as my deployments are in subcharts, I cannot access the data used to generate the secrets.

I tried many things:

Lookups

```yaml
{{- range .Values.indices }}
{{- $secretObj := (lookup "v1" "Secret" $.Release.Namespace "config-elasticsearch") | default (dict "data" (dict)) }}
checksum/{{ . }}: {{ get $secretObj.data . | sha256sum }}
{{- end }}
```

The idea was to generate the hash from the secret values stored in Kubernetes!

It works... except that the secret I access at that point... is the old one.

So I would detect changes too late :(

Adding hash in global

```yaml
{{- $_ := set $.Values.global "extraCaCertsHash" (.Values.s3Backup.extraCaCerts | sha256sum) }}
{{- $_ = set $.Values.global "smtpHash" (.Values.smtp | toYaml | sha256sum) }}
```

The idea was to compute the hash in the Umbrella and make it available in .Values.global for the subcharts.

Nice!

Except that... changes to .Values.global are not propagated to subcharts 😕

Restructuring Values

I searched the web to see what others were doing...

And I finally decided to restructure the Values file to add dependencies in the global part.

But instead of computing hash of the file, I computed hash of the variable part of the file, coming from the values.

```yaml
#### values.yaml
podAnnotations:
  checksum/extra-ca: '{{ .Values.global.extraCaCerts | sha256sum }}'

#### deployment.yaml
{{- range keys .Values.podAnnotations }}
{{ . }}: {{ tpl (get $.Values.podAnnotations . ) $ }}
{{- end }}
```

Helm repository

Once you have a Helm chart, you need to expose it.

Writing to it must be secured, but I chose to keep read access public.

I could have put it all in an S3 bucket and managed rights with S3.

But this would have meant managing developers' rights with AWS IAM. I wanted to skip this.

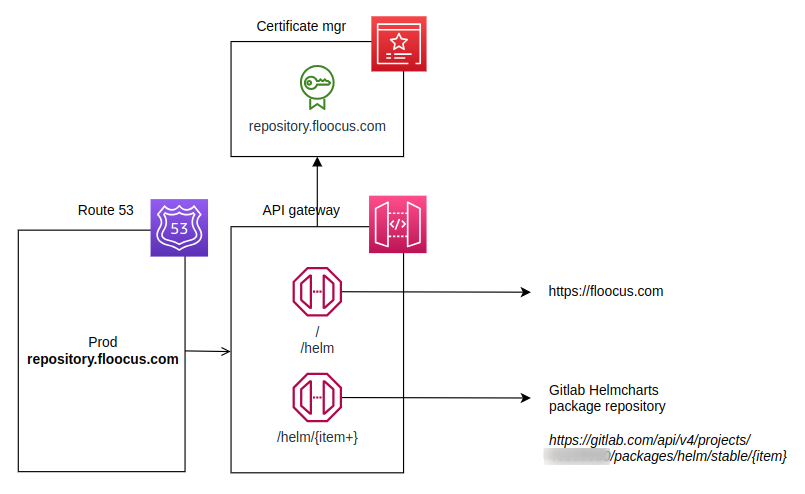

Then I thought of a CQRS way of doing it:

- Using the GitLab Helm repository, with GitLab access rights, for publishing the charts and for accessing development charts

- Exposing the GitLab Helm repo through AWS API Gateway for a neat (nice URL) public access

1h of CloudFormation later, it was ready and working under https://repository.floocus.com/helm 😀

Extra features 😁

Demo

In the previous setup, I had specific docker-compose or Kube templates to generate a Demo platform.

The demo mode includes:

- A set of users with different rights

- A team

- A set of whisperers, among which, one is capturing several services of Spider itself

With Helm, I managed quite easily to incorporate the Demo mode inside the official Helm chart.

- Setting `global.isDemo` to `true` deploys the above configuration objects, and you may see Spider capturing itself.

- Setting it back to `false` removes any demo artifact.

This is done only by conditional templating of the services deployment and of the init configuration for indices.

Neat!
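As a minimal sketch of that conditional templating, a demo-only object can simply be wrapped in an `if` on the global flag. The ConfigMap name and its content are hypothetical placeholders, not the actual Spider demo data.

```yaml
{{- if .Values.global.isDemo }}
# Rendered only when the demo flag is set. With isDemo set to false the object
# is absent from the release, so Helm deletes it on the next upgrade.
apiVersion: v1
kind: ConfigMap
metadata:
  name: demo-users          # hypothetical name
data:
  users.json: |
    [{"name": "demo-admin"}]
{{- end }}
```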

Dev & debug

Similarly, I had a different set of templates for Development and Debugging:

- Mount the source code in the containers for easy reloading and debugging

- Expose some debug port as a NodePort

- Use a different license server

- ...

Now, all can be set in the values file with global.isDev and some specific keys in the microservices subcharts.

```yaml
customer:
  name: customer
  functionalName: customers
  image:
    name: customers
  options:
    privateKey: true
  ##### dev options :-)
  dev:
    mountSrc: false
    debug: false
    debugPort: 30229
  ####
  autoscaling:
    min: 1
    max: 20
    cpuThreshold: 300m
  resources:
    requests:
      cpu: 2m
      memory: 100M
  indices:
    - users_1_customers.lst
```

Gorgeous!
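For illustration only, here is how a subchart template might consume those dev keys to expose a debug port. This is a sketch under assumptions: the container port 9229 (the Node.js inspector default), the `app` label and the `-debug` suffix are not taken from the Spider charts.

```yaml
{{- if and .Values.global.isDev .Values.dev.debug }}
# Extra Service created only in dev mode with debugging enabled
apiVersion: v1
kind: Service
metadata:
  name: {{ .Values.name }}-debug       # hypothetical naming
spec:
  type: NodePort
  selector:
    app: {{ .Values.name }}            # assumes pods carry this label
  ports:
    - port: 9229                       # assumed debug port inside the container
      targetPort: 9229
      nodePort: {{ .Values.dev.debugPort }}
{{- end }}
```

With `debug: false` or `global.isDev: false`, nothing is rendered and the regular deployment is left untouched.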

Documentation

Links to official documentation: