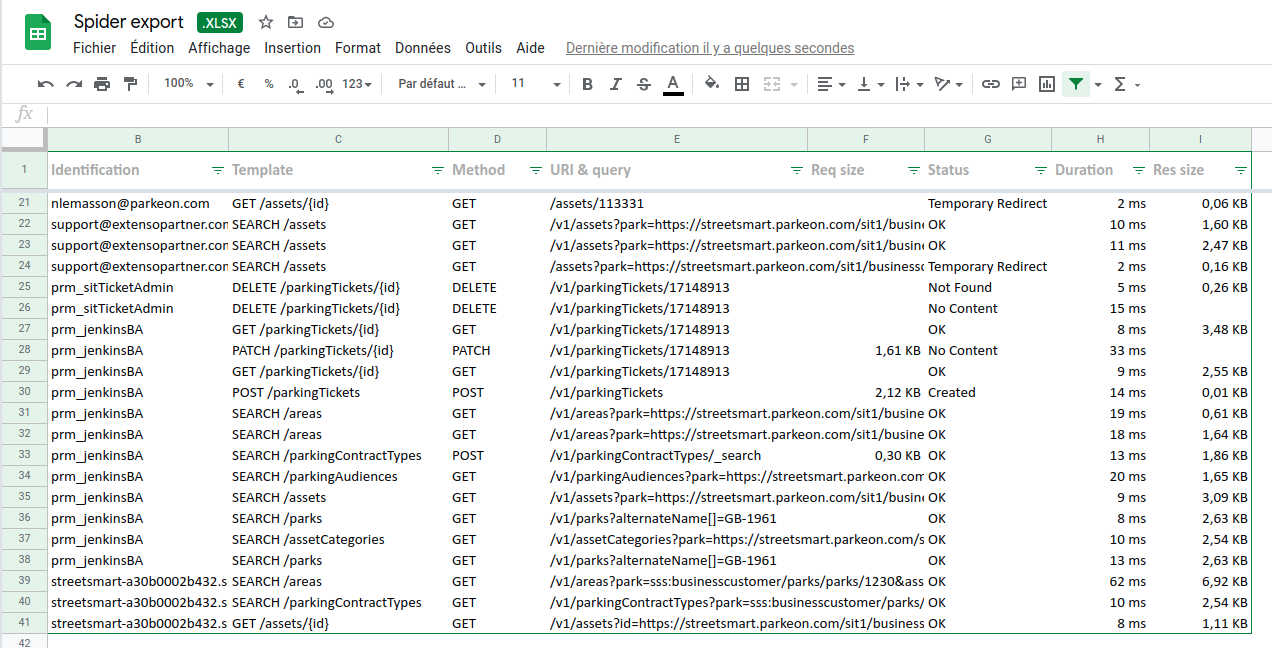

Excel export

Another Bertrand request: you may now export the grid to an Excel file. All settings made to the grid are transferred to the Excel output:

- Selected columns

- Size of columns

- Duration unit

- And nice formatters

The file comes with a predefined filter area and some metadata about the current search filters in Spider.
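To give an idea of how grid settings can carry over to the export, here is a minimal sketch. It is not the actual Spider code: `GridColumn`, `SheetModel` and `buildSheet` are hypothetical names, and the result is a plain data model that any spreadsheet library could then write to a file.

```typescript
// Hypothetical column settings, as the grid might hold them.
interface GridColumn<Row> {
  title: string;
  width: number; // column size chosen in the grid
  selected: boolean; // whether the user kept the column
  format: (row: Row) => string | number; // formatter, e.g. applies the duration unit
}

// Plain model of the sheet to write.
interface SheetModel {
  header: string[];
  widths: number[];
  rows: (string | number)[][];
}

// Keeps only the selected columns, in grid order, with their sizes and formatters.
function buildSheet<Row>(columns: GridColumn<Row>[], data: Row[]): SheetModel {
  const visible = columns.filter((column) => column.selected);
  return {
    header: visible.map((column) => column.title),
    widths: visible.map((column) => column.width),
    rows: data.map((row) => visible.map((column) => column.format(row))),
  };
}
```

The point of the design is that the export reuses the same column definitions as the grid, so a change in the grid settings needs no extra export code.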

Fullscreen grid / sequence diagram or stats

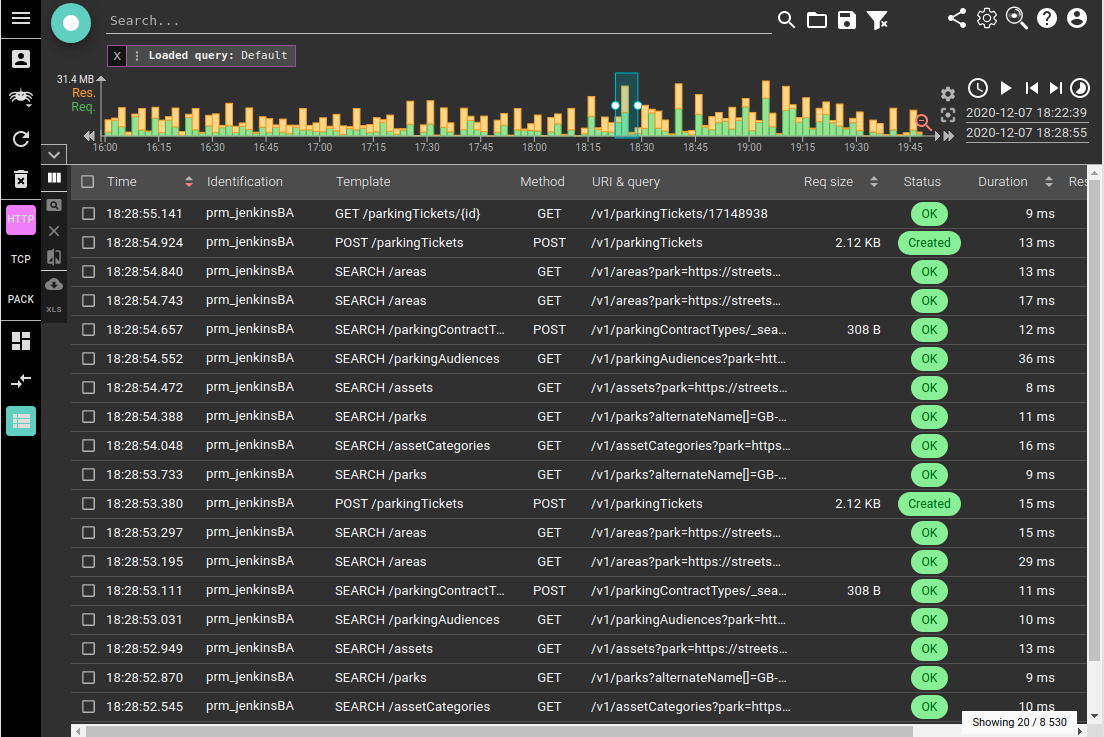

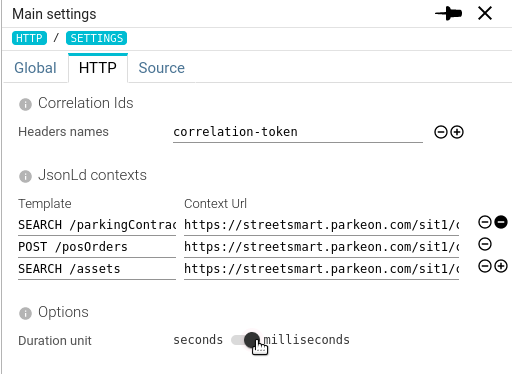

HTTP duration in milliseconds

Requested by Michal K, Bertrand and others, I added the possibility to choose the unit for HTTP durations: seconds or milliseconds.

It may look simple, but it impacts many places, where I (fortunately) only had to inject a single component to update them all at once:

- Grid

- Sequence diagram

- Details tab

- Diff tab

- Filter histogram

- Map tooltips and arrow legend

React feels like magic: they are all updated with a single switch :-D

I did not do it in stats: too much work in the current stats framework for little value.
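The idea of a single shared formatter can be sketched as follows. This is an illustration only: `DurationUnit` and `formatDuration` are hypothetical names, and I assume here that durations are stored in seconds. In the UI, one small React component would wrap such a function and read the unit from the settings.

```typescript
// Hypothetical unit setting, chosen once by the user.
type DurationUnit = "s" | "ms";

// Formats a duration stored in seconds (assumption) in the requested unit.
function formatDuration(seconds: number, unit: DurationUnit): string {
  if (unit === "ms") {
    // Round to whole milliseconds to avoid floating point noise.
    return `${Math.round(seconds * 1000)} ms`;
  }
  return `${seconds.toFixed(3)} s`;
}
```

Since every view goes through the same function, switching the unit in one place changes the grid, the tabs and the tooltips together.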

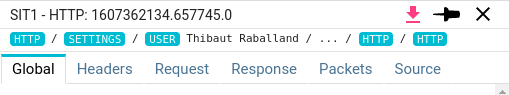

Neater breadcrumb

As discussed in Streetsmart, showing the resource name in the breadcrumb is not always the best option, especially for generated resources.

Thus I improved the Spider breadcrumb to display the resource name only when it is intelligible, and to always keep the first and previous items accessible.
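A rough sketch of such a rule is given below. It is only an assumption of how it could work: the `Crumb` shape, the "intelligible" heuristic (short, and not a long hexadecimal identifier) and the `...` placeholder are all made up for the example.

```typescript
// Hypothetical breadcrumb item: a resource type and an optional name.
interface Crumb {
  type: string;
  name?: string;
}

// Assumed heuristic: a name is intelligible when it is short
// and does not look like a generated identifier (long hex / UUID).
function isIntelligible(name: string): boolean {
  return name.length <= 30 && !/^[0-9a-f-]{16,}$/i.test(name);
}

// Falls back to the resource type when the name is missing or unreadable.
function crumbLabel(crumb: Crumb): string {
  const name = crumb.name;
  return name !== undefined && isIntelligible(name) ? name : crumb.type;
}

// Always keeps the first, previous and current items; collapses the rest.
function visibleCrumbs(trail: Crumb[]): string[] {
  const labels = trail.map(crumbLabel);
  if (labels.length <= 3) {
    return labels;
  }
  return [...labels.slice(0, 1), "...", ...labels.slice(-2)];
}
```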

Try and tell me what you think! :)

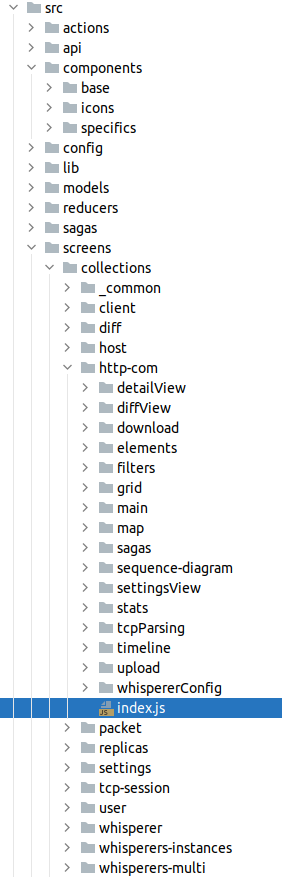

Network view UI internal upgrade

Following the work on the Monitoring UI, I upgraded the main UI.

- 2020-08 - Restructure of code for better maintenance.

- 2020-11 - Finished the migration to the latest versions of React, Redux and Material UI v4, and the migration to Hooks.

- 2020-11 - Refactor of all code to have all display and processing related to a protocol in a single folder, loaded by dependency injection in the core of the application: Components, tabs, UI parts, actions, sagas...

Result:

- Components are grouped into

  - base components, which can be shared across UIs and are agnostic to this specific UI

  - specific components, which are coupled to the state structure or are really business specific

- All application-specific work is located in the screens folder

  - includes the app initialization and state recovery

  - includes the screen structure and coupling to the theme

  - includes containers to couple base components to the state

  - includes a collections folder in which each collection includes the specifics for a resource:

    - details view

    - diff view

    - download package builder

    - upload parser

    - filters definition

    - grid and excel columns definition

    - map nodes and links definition

    - sequence diagram links building rules

    - stats definition

    - timeline configurations

    - settings tab

    - whisperer parsing config options

    - custom sagas if needed
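To illustrate the "one folder per resource, injected into the core" idea, here is a minimal sketch. It is not the real Spider structure: `ProtocolCollection`, `CollectionRegistry` and the `httpCom` resource are hypothetical, and only a few of the parts listed above are modelled.

```typescript
// Hypothetical contract that each collection folder would export.
interface ProtocolCollection {
  resource: string;
  gridColumns: string[];
  filters: string[];
  renderDetails: (item: Record<string, unknown>) => string;
}

// The core only knows this registry, never the protocols themselves.
class CollectionRegistry {
  private readonly byResource = new Map<string, ProtocolCollection>();

  register(collection: ProtocolCollection): void {
    if (this.byResource.has(collection.resource)) {
      throw new Error(`Collection already registered: ${collection.resource}`);
    }
    this.byResource.set(collection.resource, collection);
  }

  get(resource: string): ProtocolCollection {
    const collection = this.byResource.get(resource);
    if (collection === undefined) {
      throw new Error(`Unknown collection: ${resource}`);
    }
    return collection;
  }

  resources(): string[] {
    return Array.from(this.byResource.keys());
  }
}

// Example definition, as a protocol folder might provide it (made-up values).
const httpCommunications: ProtocolCollection = {
  resource: "httpCom",
  gridColumns: ["uri", "status", "duration"],
  filters: ["status"],
  renderDetails: (item) => `HTTP ${String(item["status"])}`,
};

const registry = new CollectionRegistry();
registry.register(httpCommunications);
```

With this kind of contract, adding a protocol means adding a folder and registering it, without touching the core screens.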

The structure is much better, and so is troubleshooting :) I refactored / rewrote much of the code that dated from my first months with React / Redux. It could still be improved, but it is much better structured and architected! :)

HOW MUCH I LEARNED building Spider !!

Next step: extract the protocol folders into plug-ins. But this is another story!

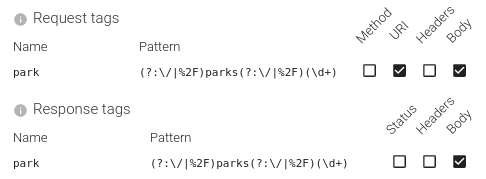

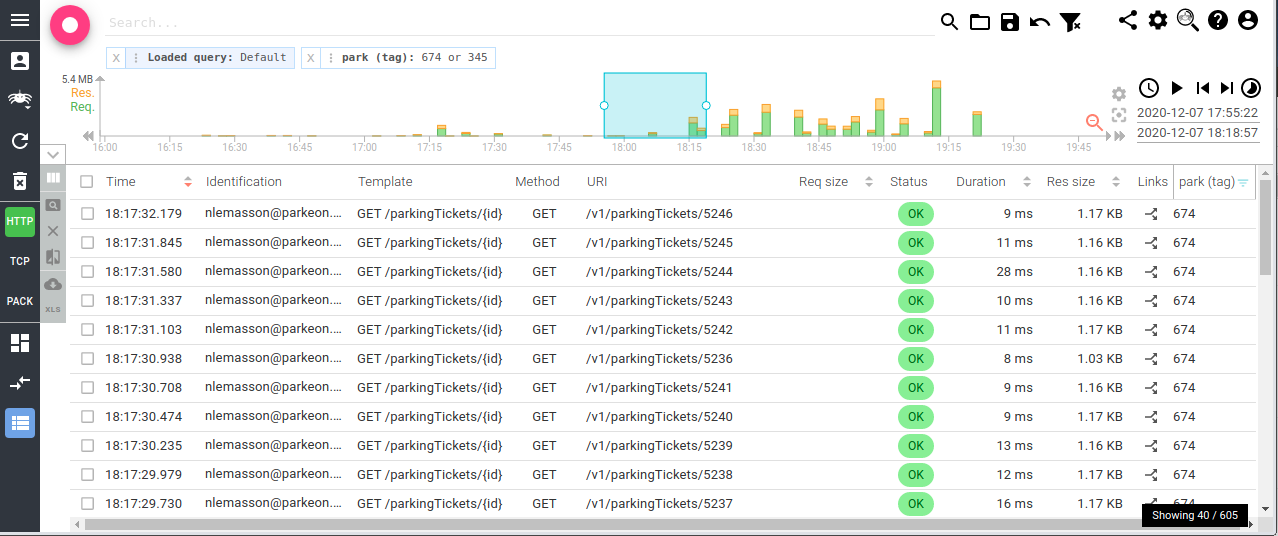

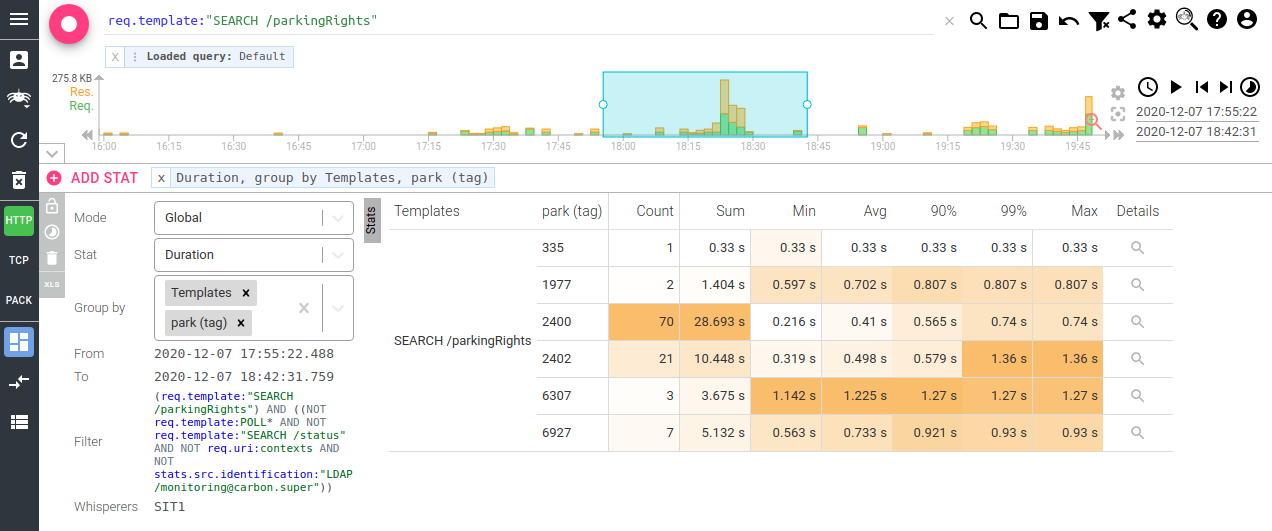

Tags rework & use case - Filter on Park identifier !!

I reworked the Tags feature to merge request and response tags into one result. Indeed, after using it for a while, this offers a better UX.

For instance, in Spider, you may define a Park id extractor:

These regular expressions instruct Spider to extract the internal id of the parks referenced in the communications, and to save them in the park Tag.

Spider will then, when parsing, unzip the communications, run the regular expressions and extract the values.
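The extraction step can be pictured with the sketch below. It is an assumption of how it could be done, not the Whisperer code: `TagExtractor` and `extractTags` are hypothetical names, the bodies are assumed to be already unzipped, and the `parkId` JSON field in the usage example is made up.

```typescript
// Hypothetical extractor: a tag name and a regular expression
// whose first capture group is the value to save.
interface TagExtractor {
  tag: string;
  pattern: RegExp;
}

// Runs every extractor on both bodies (already unzipped, by assumption)
// and merges request and response values into one result per tag.
function extractTags(
  extractors: TagExtractor[],
  requestBody: string,
  responseBody: string
): Record<string, string[]> {
  const result: Record<string, string[]> = {};
  for (const { tag, pattern } of extractors) {
    const values: string[] = [];
    // Force the global flag so that all occurrences are found.
    const flags = pattern.flags.indexOf("g") === -1 ? pattern.flags + "g" : pattern.flags;
    for (const body of [requestBody, responseBody]) {
      const re = new RegExp(pattern.source, flags);
      let match: RegExpExecArray | null;
      while ((match = re.exec(body)) !== null) {
        const value = match[1];
        if (value !== undefined && values.indexOf(value) === -1) {
          values.push(value); // keep each value once
        }
        if (match[0] === "") {
          re.lastIndex++; // avoid looping forever on empty matches
        }
      }
    }
    if (values.length > 0) {
      result[tag] = values;
    }
  }
  return result;
}
```

For example, with an extractor `{ tag: "park", pattern: /"parkId":"(\w+)"/ }`, a park id found in the request and two found in the response end up in a single `park` tag, without duplicates.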

The park column can then be added to the grid and filtered on, which adds a nice filtering criterion when working!

Last, but not least, you may even do stats! For instance, let's compare the latency of parkingRights calls depending on the park configuration:

That's amazing !! :)

Visual help - resize handlers

Alerting

New independent alert service

After much study of Prometheus Alertmanager and some other solutions, I decided it would be faster and cheaper in resources and time to implement a basic alerting service to start with :) Especially given the security integration...

So I did it. A new service (45MB - 0% CPU) checks various metrics from the monitoring and sends mails to administrators in case of issues. It sends them again after some time if the problem is not solved.

Complex rules can be written... as it is code ;-) Took a couple of days, and works like a charm! Of course, it is - itself - monitored in the monitoring, & integrated in the automated setup ;)

Implemented probes

- ES healthcheck

- Redis healthcheck

- Change in infrastructure (increase or removal of nodes)

- Low ES free space

- No new status (Whisperers down)

- Too many logs over last minute

Of course, an alert is also sent when a probe cannot run.
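The behaviour described above (alert on failure, alert again after some time, alert when the probe itself breaks) can be sketched like this. It is a simplified illustration, not the service code: `Probe`, `AlertService` and `sendMail` are hypothetical, probes are synchronous to keep the example short, and the current time is passed in explicitly.

```typescript
type ProbeResult = { ok: true } | { ok: false; reason: string };

// Hypothetical probe: a name and a check that may throw.
interface Probe {
  name: string;
  check: () => ProbeResult;
}

// A probe that cannot run is reported as a failure.
function runProbe(probe: Probe): ProbeResult {
  try {
    return probe.check();
  } catch (error) {
    return { ok: false, reason: `probe could not run: ${String(error)}` };
  }
}

class AlertService {
  // Probe name -> time (ms) of the last mail sent for an ongoing problem.
  private readonly lastSent = new Map<string, number>();
  private readonly resendAfterMs: number;
  private readonly sendMail: (subject: string) => void;

  constructor(resendAfterMs: number, sendMail: (subject: string) => void) {
    this.resendAfterMs = resendAfterMs;
    this.sendMail = sendMail;
  }

  run(probes: Probe[], now: number): void {
    for (const probe of probes) {
      const result = runProbe(probe);
      if (result.ok) {
        this.lastSent.delete(probe.name); // problem solved, reset
        continue;
      }
      const last = this.lastSent.get(probe.name);
      // Send on first failure, then again once the resend delay has elapsed.
      if (last === undefined || now - last >= this.resendAfterMs) {
        this.sendMail(`[${probe.name}] ${result.reason}`);
        this.lastSent.set(probe.name, now);
      }
    }
  }
}
```

Since rules are plain code, a probe can be as complex as needed; the service only cares about the `ok` / `reason` result.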

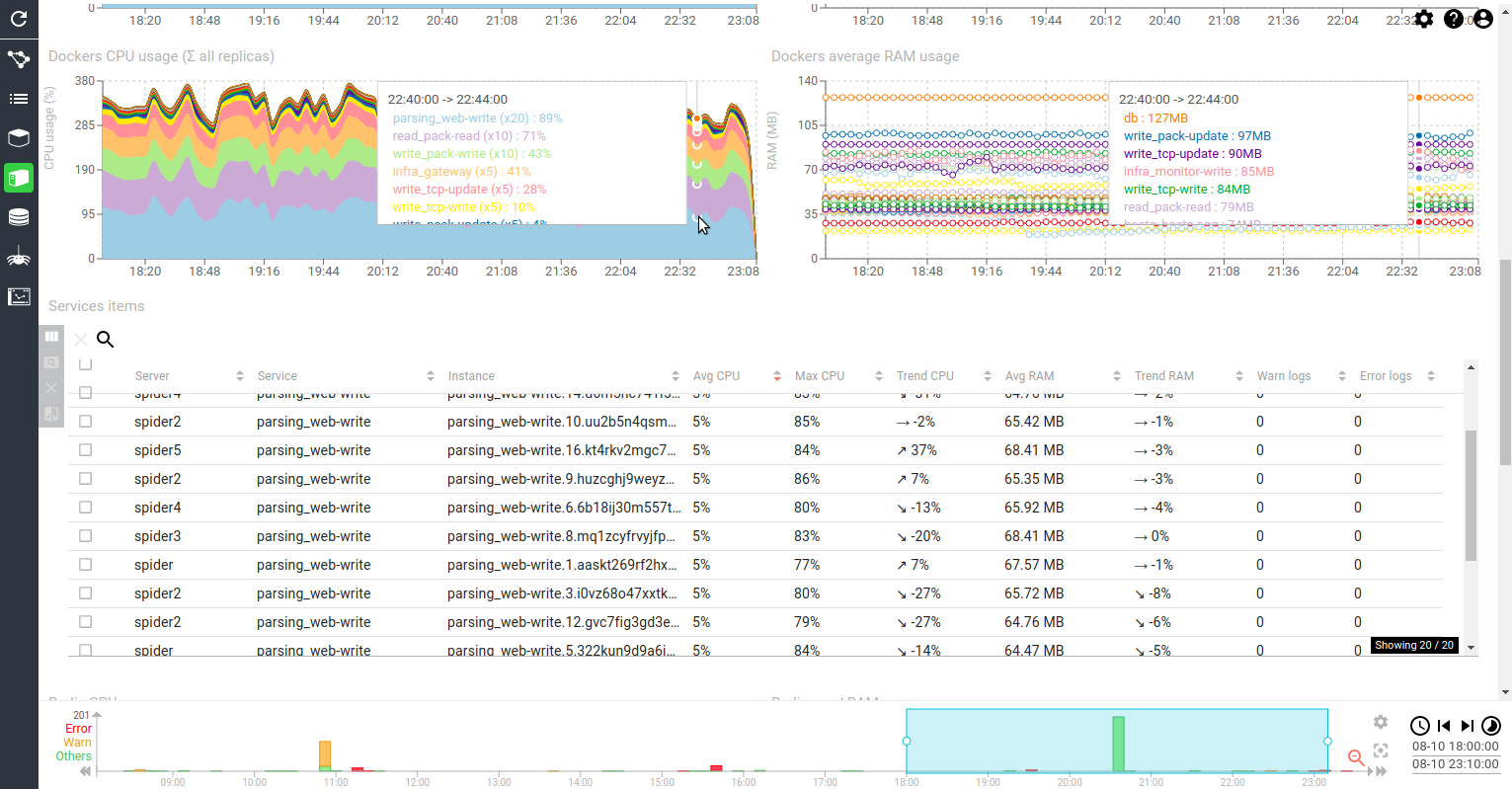

Monitoring GUI upgrade

I started a BIG and LONG task: removing the technical debt on the UI side:

- Libraries updates

- Moving React to function-based components and hooks whenever possible

- Refactoring

- Material UI upgrade + CSS-in-JS + theming approach

First application to be reworked: the Monitoring UI. It allowed me to start with a full application and to build components shared with NetworkView, without struggling with the complexity of the latter.

The Timeline component was refactored too (https://www.npmjs.com/package/@spider-analyzer/timeline), which makes maintenance much easier :)

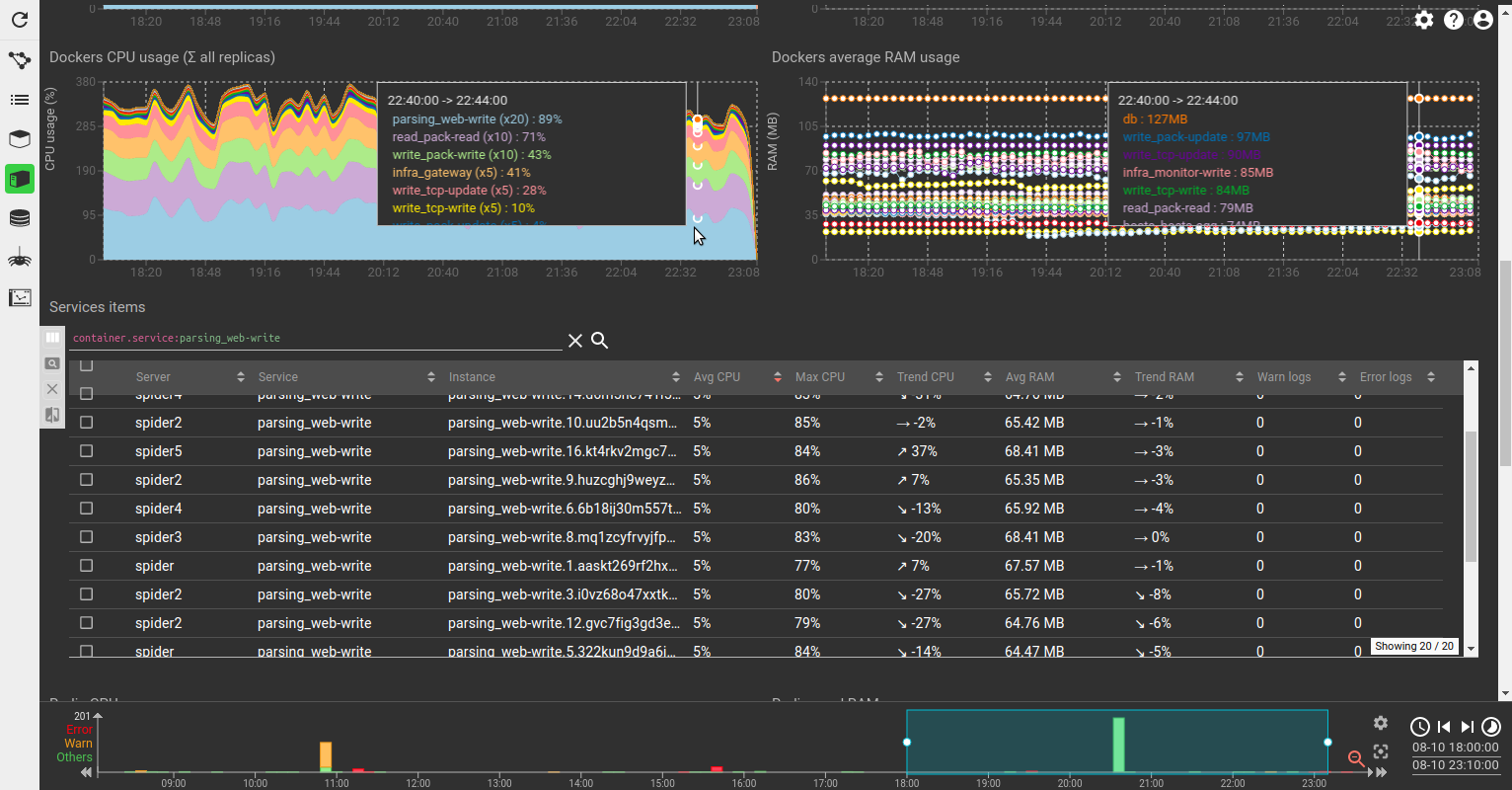

Since the result is not very visible (apart from in the code), I took the opportunity to introduce one feature along the way: dark mode. It was often requested by users, and MUI theming made it easy :)

Here it is:

Dark mode can be activated in the settings.

... Now, let's bring this work to the NetworkView UI!