Using Elasticsearch asynchronous searches

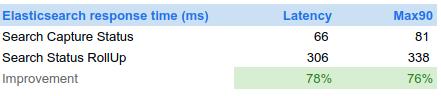

On May 15th, the Elastic team released Elasticsearch 7.7, introducing asynchronous searches: a way to get partial results of a search request and to avoid waiting indefinitely for the complete result.

I saw in it a way to improve the user experience while loading Spider, and to avoid the last timeouts that still sometimes occur when generating the Timeline or the Network map over many days.

So, here it is: 9 days after the ES 7.7 release, the implementation of async searches in Spider is stable (I hope ;) ) and efficient!

Normal search

Async search

I stumbled a bit at the beginning before finding the right way to use this:

When to use partial results

Loading partial results while data was already present meant resetting the existing map or timeline. The result was ugly and disturbing. I decided to limit partial loads to the initial load, whisperer switch, view switch... In other words: when the result set is empty before searching.

API integration

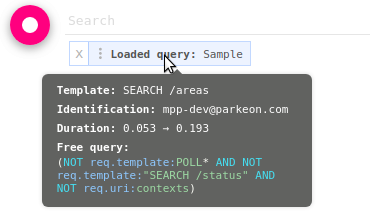

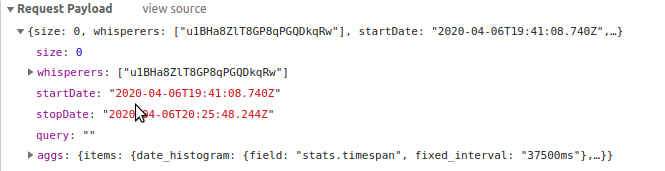

Although ES does not require clients to resend the query parameters to get the async search follow-up, the Spider API does.

Indeed, the async final result may present a 'next' link to get the next page. This link is built as hypermedia and includes everything necessary to proceed easily to the next page.

As Spider is stateless, the client is required to send all request parameters with every async follow-up, so that Spider can build this 'next' hypermedia link. Spider makes this easy to comply with by providing another hypermedia link, with all parameters included, to get the async follow-up.
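To illustrate the idea, here is a minimal sketch of how a stateless API could build such a follow-up link. It is not Spider's actual code: the path, the `asyncId` parameter name and the example parameters are all hypothetical, chosen only to show that the link carries every original request parameter.

```python
from typing import Dict
from urllib.parse import urlencode


def follow_up_link(path: str, params: Dict[str, str], async_id: str) -> str:
    """Build a follow-up link that repeats every original parameter.

    'asyncId' is a hypothetical parameter name used for this sketch.
    Since the server keeps no state, it needs all parameters back in
    order to build the 'next' page link once the search completes.
    """
    query = dict(params)          # copy: do not mutate the caller's dict
    query["asyncId"] = async_id   # identifies the running async search
    # Sort the parameters so the generated link is deterministic.
    return f"{path}?{urlencode(sorted(query.items()))}"


# Hypothetical example: a search filtered on a whisperer, 50 items per page.
link = follow_up_link("/search", {"whisperer": "w1", "size": "50"}, "abc")
print(link)
```

The client only has to follow the link it received, without remembering which parameters it sent in the first place.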

Client usage

I also tested several solutions to chain the calls in the client (UI), to finally find that the Elastic team made it really easy:

- You may define a timeout (wait_for_completion_timeout) to get the first partial results in the first query.

- If the results are available before the timeout, you get them straight away, as with a normal search.

- Otherwise, you get a result with partial (or no) data.

- On further calls, you may get the progress of the search straight away... or also provide a timeout.

The beauty of this is that there is no drawback in using async rather than a normal search. If you use timeouts, you always get the results as soon as they are available. :)
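As a rough sketch of the calls involved, the helpers below build the Elasticsearch URLs for the initial async search and for its follow-up, and classify a response from its `is_running` and `is_partial` flags. The base URL and index name are assumptions for the example, and the helper names are mine, not part of any client library.

```python
from typing import Any, Dict, Optional
from urllib.parse import quote, urlencode


def submit_url(base_url: str, index: str, timeout: str = "1s") -> str:
    """URL of the initial POST that starts the async search."""
    params = urlencode({"wait_for_completion_timeout": timeout})
    return f"{base_url}/{quote(index, safe='*,')}/_async_search?{params}"


def follow_up_url(base_url: str, search_id: str,
                  timeout: Optional[str] = None) -> str:
    """URL of the GET that fetches the current state of the search.

    Without a timeout, ES answers straight away with the progress so far.
    With a timeout, it waits up to that long for the search to complete.
    """
    url = f"{base_url}/_async_search/{quote(search_id, safe='')}"
    if timeout is not None:
        url += "?" + urlencode({"wait_for_completion_timeout": timeout})
    return url


def search_state(body: Dict[str, Any]) -> str:
    """Classify an async search response body."""
    if body.get("is_running", False):
        return "running"      # partial (or no) data so far
    if body.get("is_partial", False):
        return "incomplete"   # stopped, but some results are missing
    return "complete"         # same content as a normal search


# Assumed local cluster and index name, for illustration only.
print(submit_url("http://localhost:9200", "http-coms"))
print(follow_up_url("http://localhost:9200", "abc", timeout="1s"))
```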

At first, I implemented it so:

- Async search + timeout

- Wait 250ms after partial results

- Call follow-up

- Wait 250ms

- ...

But with this method, you may get the results later than they are available, which is bad for a real-time UI like Spider.

Using timeouts in a clever way, you combine partial results and ASAP responses:

- Async search + timeout

- Call follow-up + timeout (no wait)

With this usage, as soon as the query is over, ES gives you the results. And you can offer an incremental loading experience to your users.
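The chaining can be sketched as a small loop with no sleep between calls: each request carries the timeout, so ES itself holds the connection until either the search finishes or the timeout expires. This is an illustration, not Spider's UI code. The `fetch` callback is a hypothetical stand-in for the HTTP layer: called with `None` it starts the search, called with an id it requests the follow-up.

```python
from typing import Any, Callable, Dict, Iterator, Optional

# fetch(search_id, timeout) -> response body; hypothetical transport hook.
Fetch = Callable[[Optional[str], str], Dict[str, Any]]


def stream_async_search(fetch: Fetch, timeout: str = "1s",
                        max_calls: int = 100) -> Iterator[Dict[str, Any]]:
    """Yield each partial response, then the final one, with no client wait."""
    search_id: Optional[str] = None
    for _ in range(max_calls):
        body = fetch(search_id, timeout)
        yield body  # the UI can render partial data right away
        if not body.get("is_running", False):
            return  # the search is over: this was the final result
        # A search that completes within the first timeout may carry no id,
        # so keep the previous one when the field is absent.
        search_id = body.get("id", search_id)
    raise TimeoutError("async search still running after max_calls requests")


# Usage with a fake transport replaying three canned responses.
canned = [
    {"id": "abc", "is_running": True, "is_partial": True},
    {"id": "abc", "is_running": True, "is_partial": True},
    {"id": "abc", "is_running": False, "is_partial": False},
]
calls = []


def fake_fetch(search_id: Optional[str], timeout: str) -> Dict[str, Any]:
    calls.append((search_id, timeout))
    return canned[len(calls) - 1]


results = list(stream_async_search(fake_fetch))
print(len(results), calls)
```

The `max_calls` guard is an addition of this sketch, so that a search that never completes cannot loop forever.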

Implementation results

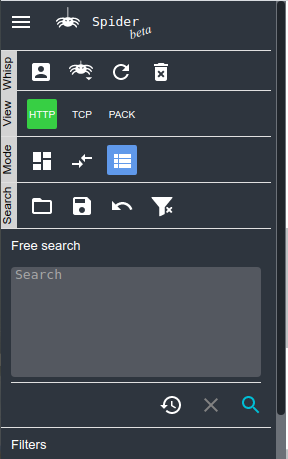

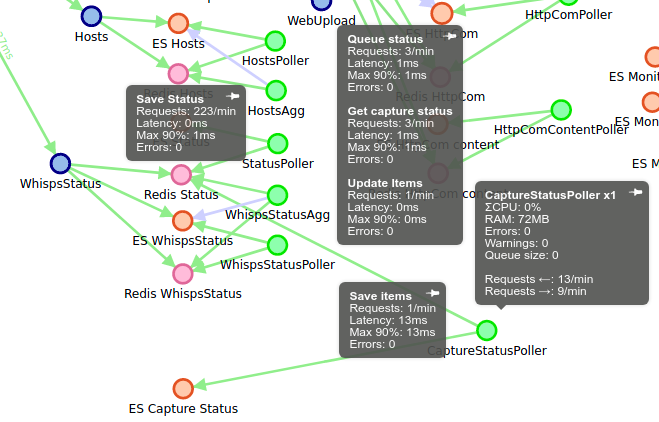

I implemented async search in the UI for the following 'long' queries:

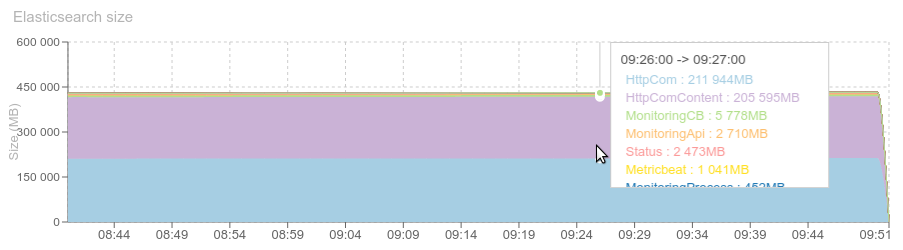

- Timeline loading

- Timeline quality loading

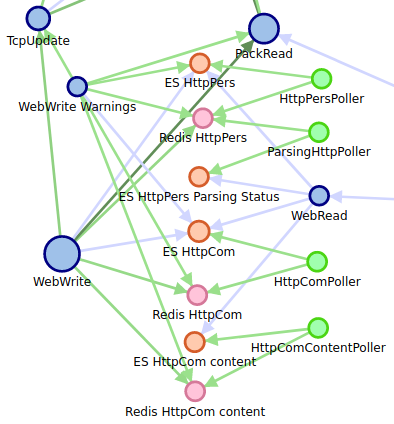

- Network map loading

- DNS records loading

On all 3 views (HTTP, TCP, Packet), with 1s timeouts.

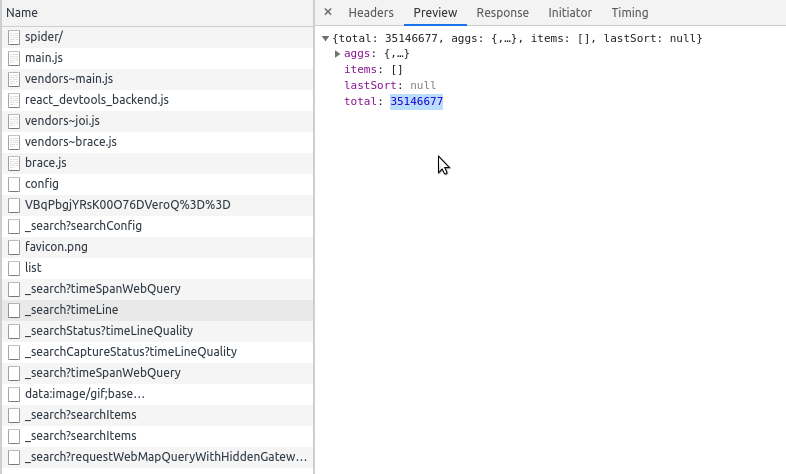

The effect is visible only when loading the full period with no filters. Indeed, other queries are way below 1s ;-) On automatic refresh, you won't see the change. The queries are not 'magically' faster: doing time-based aggregation on 30 million communications still takes... 4s :-O

As it is still new, I may have missed some stuff, so you may deactivate it in the Settings panel, to get back to normal search:

Share your feelings with me! :)