Spider was working really well with Docker Swarm until... Docker 18.06.

Docker 18.06 includes a change to improve the scalability of Swarm load balancing: https://github.com/docker/engine/pull/16. Here is the impact on Spider:

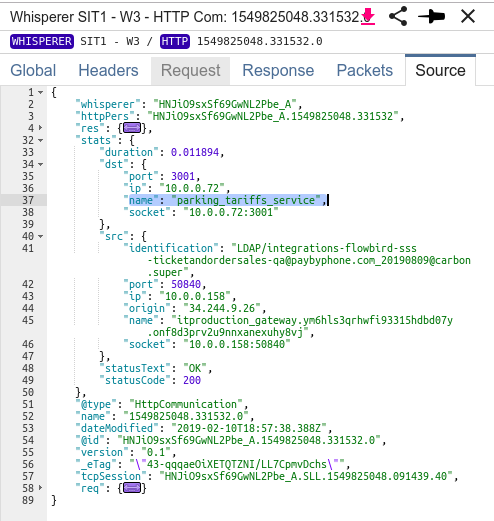

- Previously, when sniffing communications between services, the IPs seen were those of the replicas that sent or received the request (DSR mode).

- Now, the IPs used in the packets are the VIPs (NAT mode).

- The main issue is that... the VIPs have no PTR record in Swarm DNS, so the Whisperers cannot reverse-resolve their names... and Spider becomes much less usable.

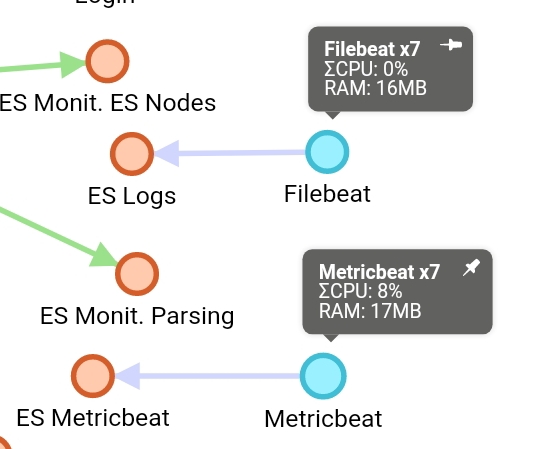

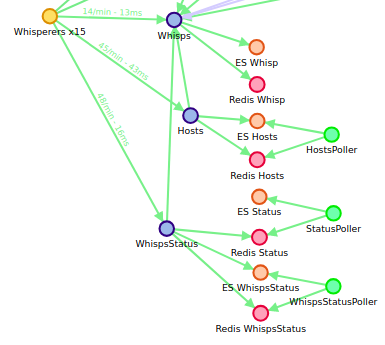

To overcome the problem, I added the possibility to give the Whisperers a list of hostnames that are regularly resolved against the DNS and preloaded into the DNS resolving mechanism.
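The idea is to turn forward lookups (name → IPs), which Swarm DNS does answer for service VIPs, into a reverse cache (IP → name). Spider's Whisperers are not written in Python, so this is only a minimal sketch of the mechanism, not Spider's code. `PreloadedResolver`, `hosts_from_env`, the 60-second refresh interval and the comma-separated format of `HOSTS_TO_RESOLVE` are all hypothetical.

```python
import os
import socket
import time


def hosts_from_env(env=None):
    """Parse HOSTS_TO_RESOLVE, assumed here (hypothetically) to be comma-separated."""
    env = os.environ if env is None else env
    raw = env.get("HOSTS_TO_RESOLVE", "")
    return [h.strip() for h in raw.split(",") if h.strip()]


def system_lookup(hostname):
    """Forward lookup through the system resolver (Swarm DNS inside a container)."""
    return socket.gethostbyname_ex(hostname)[2]  # (name, aliases, ip_list)


class PreloadedResolver:
    """Hypothetical IP -> hostname cache built from regular forward lookups."""

    def __init__(self, hostnames, refresh_seconds=60.0, lookup=system_lookup):
        self.hostnames = list(hostnames)
        self.refresh_seconds = refresh_seconds
        self.lookup = lookup
        self.cache = {}
        self.last_refresh = None

    def refresh(self):
        cache = {}
        for name in self.hostnames:
            try:
                for ip in self.lookup(name):
                    cache[ip] = name  # store the answer reversed: IP -> name
            except OSError:
                continue  # not resolvable right now (e.g. service not deployed yet)
        self.cache = cache
        self.last_refresh = time.monotonic()

    def resolve(self, ip):
        stale = (self.last_refresh is None
                 or time.monotonic() - self.last_refresh > self.refresh_seconds)
        if stale:
            self.refresh()
        if ip in self.cache:
            return self.cache[ip]
        try:
            return socket.gethostbyaddr(ip)[0]  # fall back to a classic PTR lookup
        except OSError:
            return ip  # unknown: keep the raw IP
```

Preloaded names win over the PTR lookup, and a failed lookup simply leaves the IP as-is, so a missing service never blocks the others.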

This approach has many advantages:

- You can define a list of hosts without PTR records.

- Docker's forward resolution works better than reverse resolution (it is more stable: you don't face bug: )

- The list can be given in the Whisperer config (UI) or through the Whisperer environment variable `HOSTS_TO_RESOLVE`.

- Thus, you can script the Whisperer launch so that it fetches the list of services in the Swarm right before starting the Whisperer.
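A launch script along those lines could look like the sketch below, run on a Swarm manager node rather than inside the Whisperer. `docker service ls --format '{{.Name}}'` and `docker run --env` are standard Docker CLI; the comma separator, the image name and the bare `docker run` invocation are hypothetical placeholders (a real Whisperer launch needs its other options too). In NAT mode, a service name resolves to its VIP, which is exactly the IP the Whisperer sees.

```python
import subprocess


def swarm_service_names(run=subprocess.run):
    """List Swarm service names; must run on a manager node."""
    result = run(
        ["docker", "service", "ls", "--format", "{{.Name}}"],
        capture_output=True, text=True, check=True,
    )
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


def whisperer_run_command(service_names, image):
    """Build a `docker run` command passing the names through HOSTS_TO_RESOLVE.

    The comma separator and the image are hypothetical placeholders; the
    Whisperer's other required options are deliberately omitted.
    """
    return [
        "docker", "run", "-d",
        "--env", "HOSTS_TO_RESOLVE=" + ",".join(service_names),
        image,
    ]
```

For example: `subprocess.run(whisperer_run_command(swarm_service_names(), "my-registry/whisperer"), check=True)`. Since the list is computed once, services created later stay unnamed until the Whisperer is relaunched.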

The main drawback of this approach is that you lose the power of service discovery... as the list is static. The other way would be to get the information by linking the Whisperer to the Docker socket... but this is a security risk, and would tie it too much to Docker.

While at it, I added another environment variable: `CONTAINER_NAME`. When it is set:

- The local container's own IPs are preloaded into the DNS resolving mechanism, with the `CONTAINER_NAME` value as their hostname.
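In the same spirit, preloading the container's own IPs amounts to mapping each local address to the `CONTAINER_NAME` value. This is a Python sketch, not Spider's implementation. `preload_own_ips` and `local_ips` are hypothetical helpers, and `local_ips` is only a best effort: it assumes the container hostname resolves to the container's own addresses (as Docker sets up in `/etc/hosts`), which may not list every interface.

```python
import os
import socket


def local_ips():
    """Best-effort list of this container's IPs via its own hostname (assumption)."""
    try:
        return socket.gethostbyname_ex(socket.gethostname())[2]
    except OSError:
        return []


def preload_own_ips(cache, ips, env=None):
    """Map each local IP to CONTAINER_NAME in an IP -> hostname cache, if it is set."""
    env = os.environ if env is None else env
    name = env.get("CONTAINER_NAME")
    if not name:
        return cache  # variable absent: leave the cache untouched
    for ip in ips:
        cache[ip] = name
    return cache
```

Typical call: `preload_own_ips(cache, local_ips())`, where `cache` is whatever IP → hostname table the resolver uses.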

Docker 18.09 adds a parameter, set when creating the overlay network, that deactivates this new NAT feature and restores the previous behavior: `--opt dsr`.

With this parameter set, Swarm behaves as it did before 18.06, and Spider works like a charm, but at the cost of scalability.

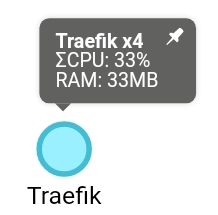

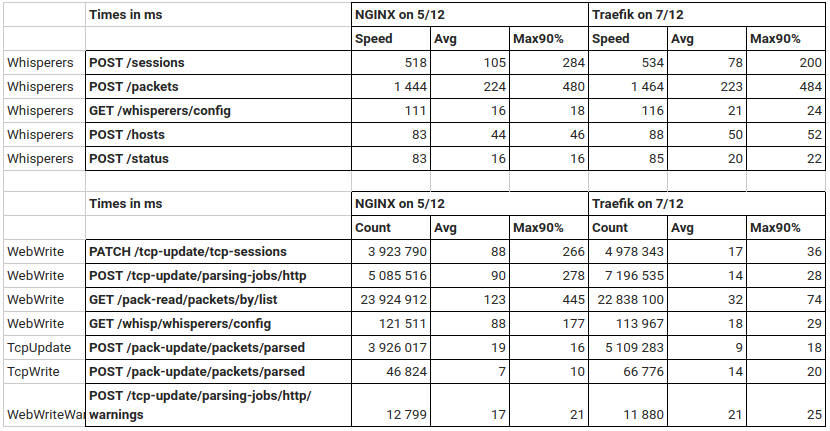
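As a sketch, the network creation can be scripted by building the `docker network create` command. `--driver overlay` is standard; the `--opt dsr` spelling is taken from this post (Docker 18.09+) and not re-verified here. The function name and the network name are hypothetical.

```python
def overlay_network_command(name, dsr=True):
    """Build a `docker network create` command for an overlay network."""
    cmd = ["docker", "network", "create", "--driver", "overlay"]
    if dsr:
        # Option as given in the post: keeps the pre-18.06 (DSR) behavior
        cmd += ["--opt", "dsr"]
    cmd.append(name)
    return cmd
```

On a manager node: `subprocess.run(overlay_network_command("spider-net"), check=True)`. The option is given at creation time, so services must then be attached to this new network.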

If scalability matters while using Spider, the best option is to switch the services' endpoint mode from VIP to DNSRR and use a load balancer like Traefik ;) See my other post from today.
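For reference, a service in DNSRR mode can be created with the standard `--endpoint-mode dnsrr` flag of `docker service create`: the service gets no VIP, and its name resolves directly to the replica IPs, so the sniffed packets should carry replica IPs again. DNSRR does not support ingress-mode published ports, which is one reason to put a load balancer such as Traefik in front. The helper below is a hypothetical sketch; service, image and network names are placeholders.

```python
def dnsrr_service_command(name, image, network, replicas=1):
    """Build a `docker service create` command using DNS round-robin (no VIP)."""
    return [
        "docker", "service", "create",
        "--name", name,
        "--network", network,
        "--replicas", str(replicas),
        "--endpoint-mode", "dnsrr",  # name resolves to the replica IPs
        image,
    ]
```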