Resiliency of Spider system under load :)

Spider endured an unwanted stress test this morning!

Thanks to it, I could check that the system behaved well, and I could confirm one bug =)

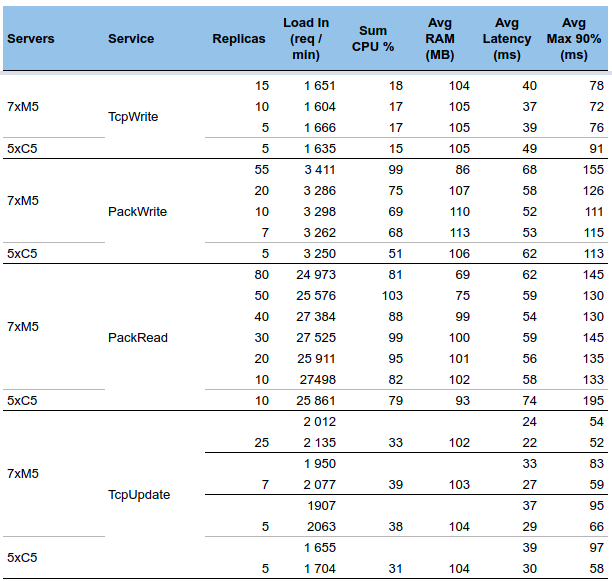

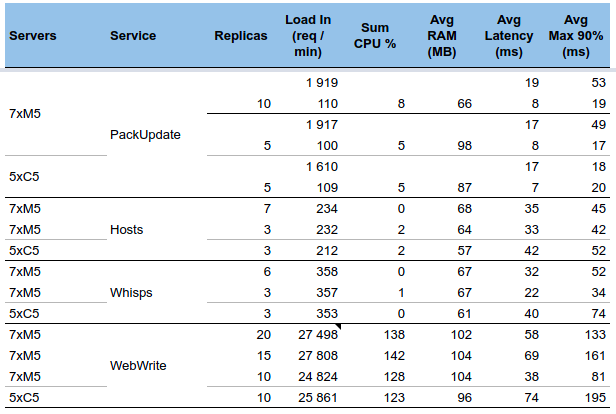

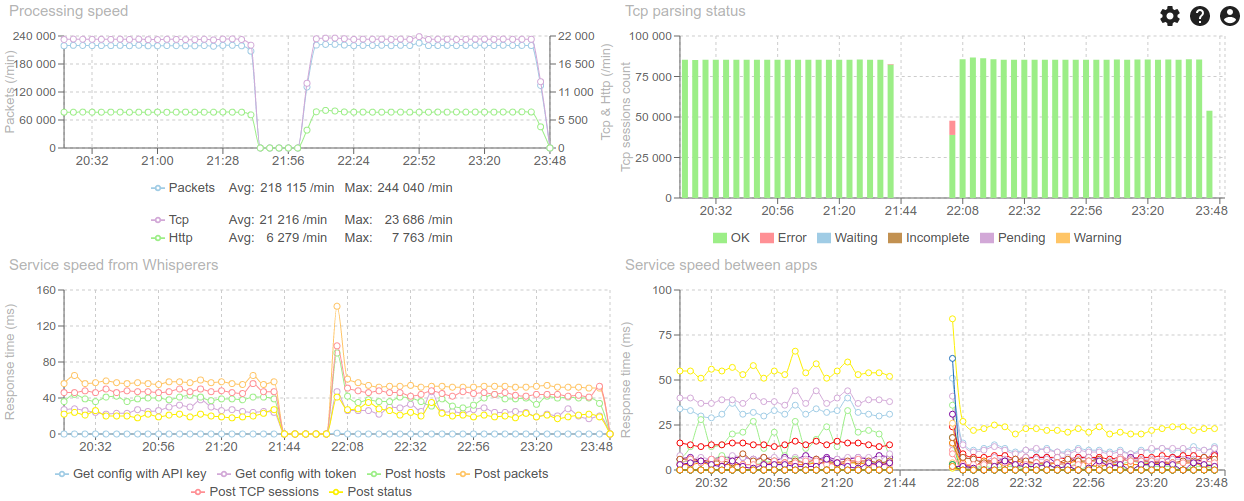

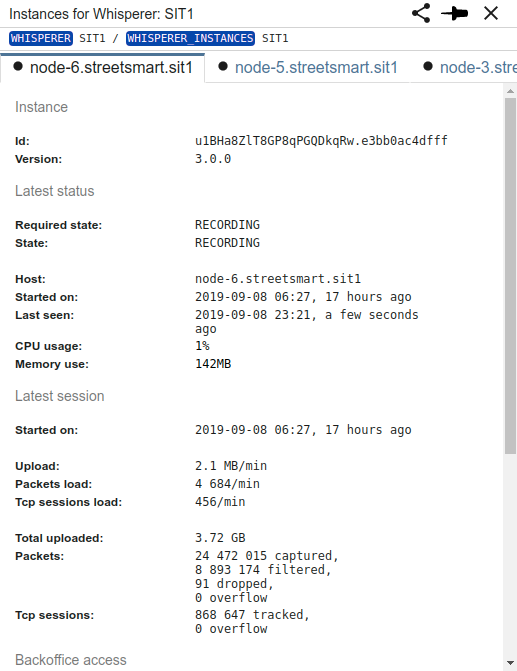

Look what I got from the monitoring:

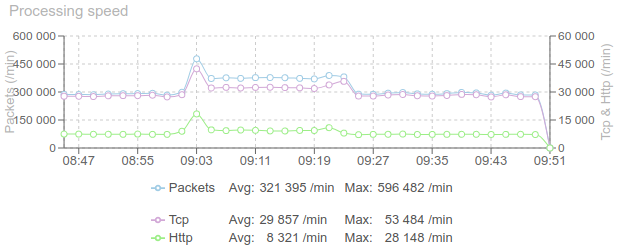

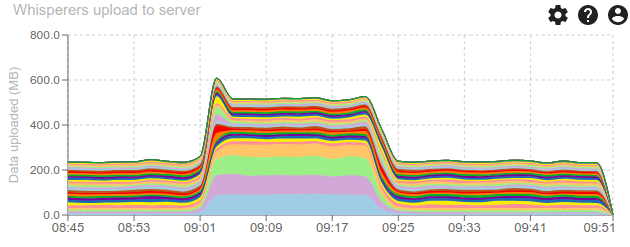

Load went from 290k/min to 380k/min between 9:00am and 9:20am:

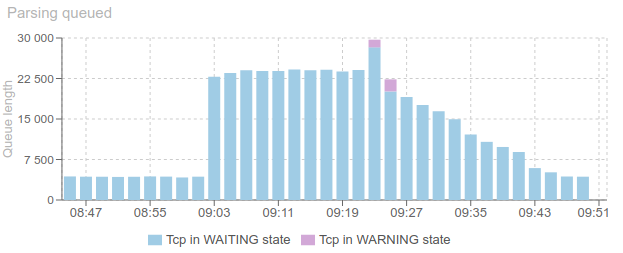

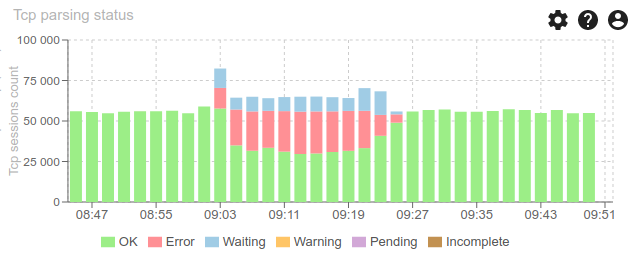

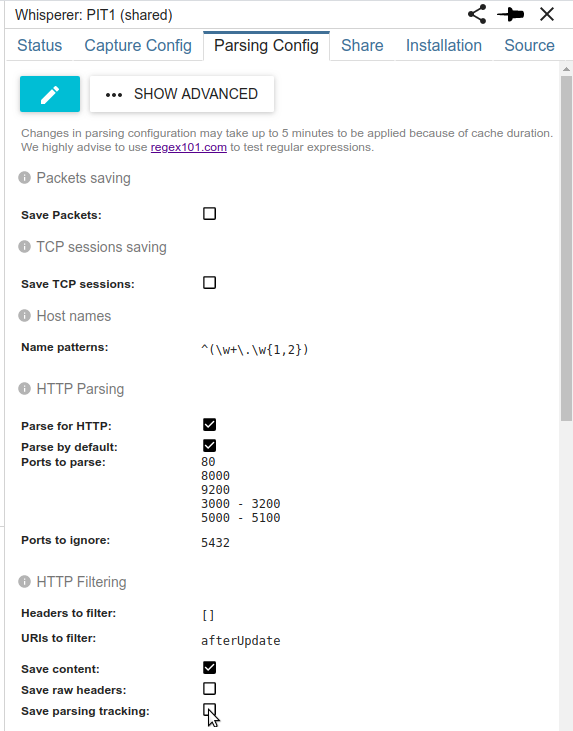
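To put the load figures above in perspective, here is a minimal sketch of the arithmetic. The helper names are made up for illustration, and only the two per-minute figures come from the monitoring:

```python
def per_second(per_minute: float) -> float:
    """Convert a per-minute rate into a per-second rate."""
    return per_minute / 60


def percent_increase(before: float, after: float) -> float:
    """Relative increase from `before` to `after`, in percent."""
    return (after - before) / before * 100


baseline = 290_000  # per minute, before the spike (from the dashboard)
peak = 380_000      # per minute, during the spike (from the dashboard)

print(f"{per_second(baseline):.0f}/s -> {per_second(peak):.0f}/s")
print(f"+{percent_increase(baseline, peak):.0f}%")
```

So the spike was roughly a one-third increase over the usual load, sustained for about 20 minutes.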

There were so many sessions to parse at once that the parsing queue was hard to empty.

This generated many parsing errors, due to missing packets (short lifetime).

But all went back to normal afterwards :)

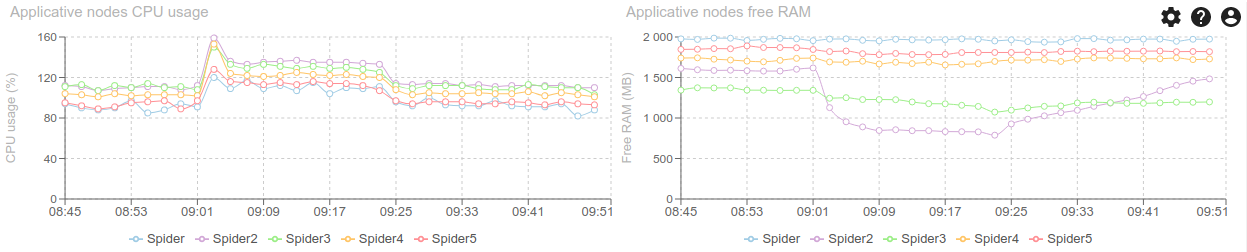

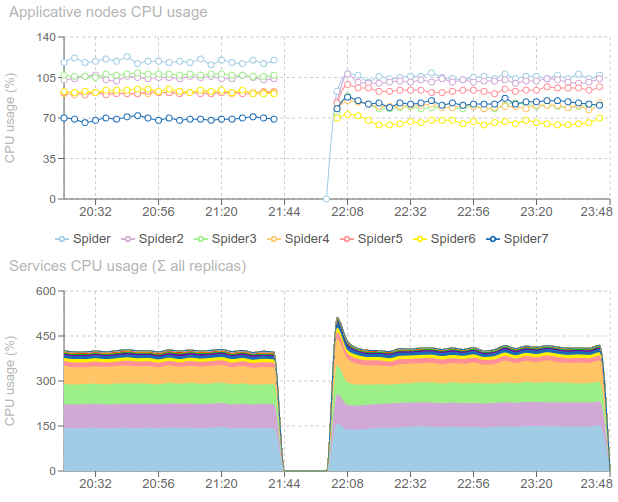

CPU and RAM were OK, so I bet the bottleneck was the configuration: limited number of parsers to absorb the load.

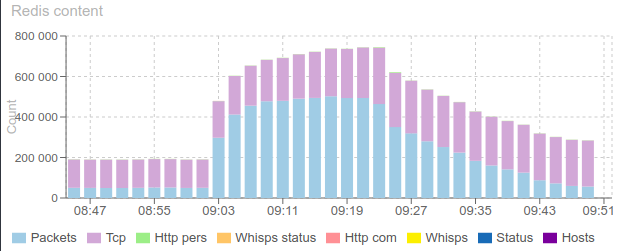

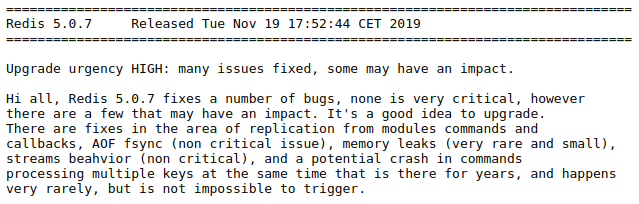
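Here is a rough sketch of why a fixed number of parsers can be the bottleneck even when CPU and RAM are fine. This is a toy model, not Spider's actual code: `simulate_backlog` and all the numbers (in thousands of sessions per minute) are hypothetical and only illustrate the mechanism.

```python
def simulate_backlog(arrivals_per_min, capacity_per_min):
    """Return the queue backlog after each minute.

    Each minute, `arrivals` items enter the queue and at most
    `capacity_per_min` items are parsed. The backlog never goes below 0.
    """
    backlog = 0
    history = []
    for arrivals in arrivals_per_min:
        backlog = max(0, backlog + arrivals - capacity_per_min)
        history.append(backlog)
    return history


# Hypothetical figures: capacity sits between normal load and spike load.
arrivals = [290, 380, 380, 380, 290, 290, 290]
capacity = 320

print(simulate_backlog(arrivals, capacity))
# [0, 60, 120, 180, 150, 120, 90]
```

In this model the backlog grows for as long as arrivals exceed capacity, then drains slowly once the load drops. If queued items expire before they get parsed, that delay turns into parsing errors.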

But there is a bug: the Redis store does not fully get back to normal. Some elements stay in the store and are never deleted:

That will prevent the system from absorbing too many spikes like this in a row.

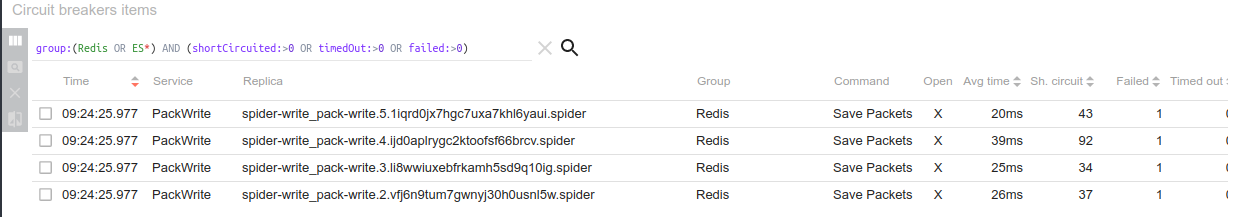

Also, some circuit breakers to Redis opened during the spike (only 4...):

Under this load, the two main Redis instances were each handling 13k requests per second (26k/s combined), with a spike at 31k/s. All that for only... 13% CPU each. Impressive!

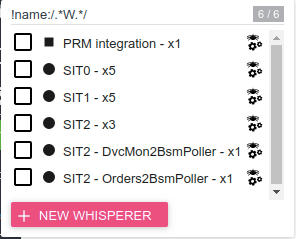

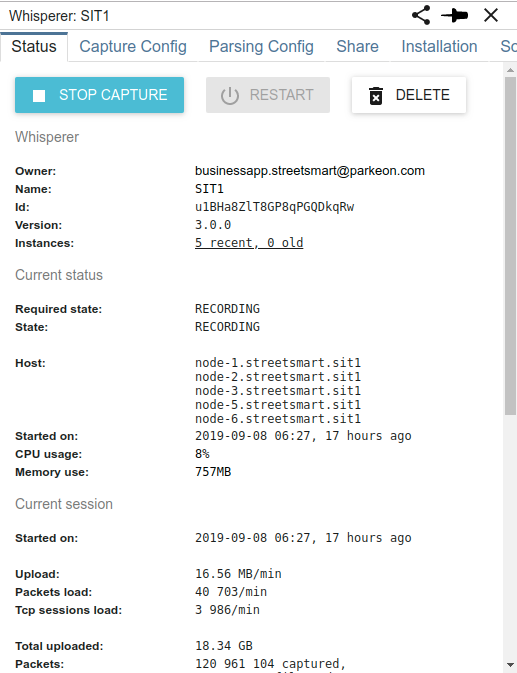
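For readers not familiar with the pattern, here is a minimal sketch of a circuit breaker. It is a generic illustration, not the implementation used in Spider: the class name, thresholds and timeout are all assumptions.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_timeout=5.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # consecutive failures before opening
        self.reset_timeout = reset_timeout          # seconds to wait before a trial call
        self.clock = clock                          # injectable for testing
        self.failures = 0
        self.opened_at = None

    @property
    def state(self):
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"
        return "open"

    def call(self, func, *args, **kwargs):
        state = self.state
        if state == "open":
            # Fail fast instead of piling more requests on a struggling backend.
            raise CircuitOpenError("circuit is open, call rejected")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call, or too many failures in a row, (re)opens the circuit.
            if state == "half-open" or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and resets the failure counter.
        self.failures = 0
        self.opened_at = None
        return result
```

A caller would wrap each request to the backend in `breaker.call(...)`. While the circuit is open, calls are rejected immediately, which gives the backend room to recover.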

And all this was due to a load test in SPT1 (the 4 whisperers at the bottom).

Conclusion:

- System is resilient enough :)

- Observability is great and important!!

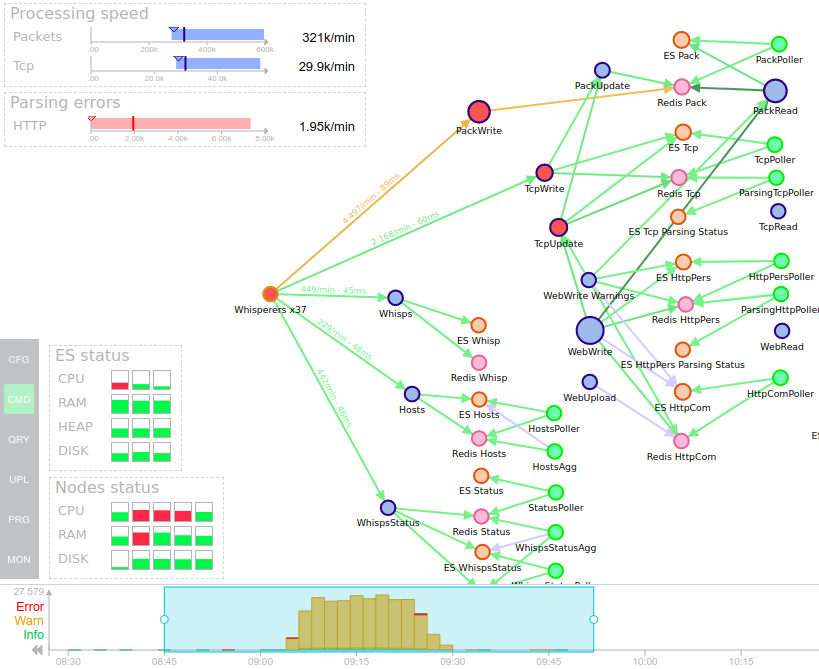

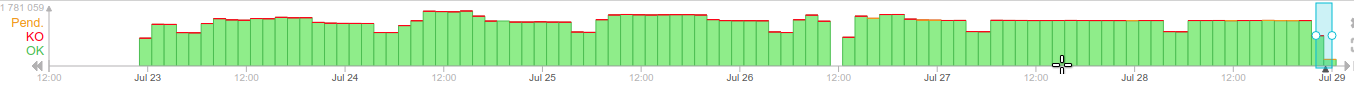

Bonus: the summary dashboard:

- A spike at 600k packets/min.

- Many warnings in the logs showing that something went wrong.

- CPU on an application node went red for some time, and on an ES node as well.

- Errors on 3 services that may not be scaled enough for this load.

- And we see the circuit breaker errors due to Redis suffering.

Really helpful!