High load capture

Sometimes you will use Spider on high load systems.

If Spider scales well, there are nonetheless attention points, and knowledge to have on how it scales.

Indeed some parts of Spider do not have autoscaling enable, even on Kubernetes.

Scaling Whisperers throughput

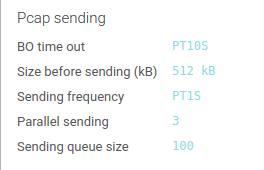

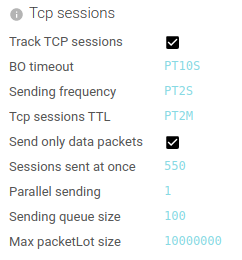

When needing to increase Whisperer throughput, you do it in Whisperer Capture Config tab, advanced settings:

- Increase parallel sending

- Decrease delay before sending

- Increase TCP sessions delay before sending

- Increase sessions parallel sending

Scaling Write Services

When injecting high volume in Spider, you need to scale write services in consequence (or let Kubernetes do it):

- Pack Write - Service that receives packets

- Tcp Write - Service that receives tcp sessions

pack-write:

replicas:

min: 5 # Increase min replicas (on Swarm)

tcp-write:

replicas:

min: 5

Scaling Redis

To absorb the load you might need to increase Redis maximum memory size.

stores:

redis:

instances:

redis-pack:

name: redis-pack

node: redis-pack

maxmemory: 1Gb #Increase max memory

redis-tcp:

name: redis-tcp

node: redis-tcp

maxmemory: 1Gb

Scaling Parsing Services

If parsing computing speed is not fast enough,

- the TCP sessions to parse may stack in the parsing queues

- the parsing delay increases

You may check these in the parsing dashboard.

The be able to increase parsing throughput, you need to increase:

- Web-Write - the parser

- Pack-Read - to access packets from Redis

- Tcp-Update - to fetch & update the TCP sessions

The most critical to scale are Web-Write and Pack-Read.

web-write:

replicas:

min: 7

pack-read:

replicas:

min: 7

tcp-update:

replicas:

min: 3

When using Kubernetes, Spider scales automatically.

Scaling Pollers

When parsing is over, the communications are saved in Redis to be then polled by Pollers to save in Elasticsearch.

Pollers may need scaling when the load is really high.

Pollers may be scaled to ways:

- By increasing replicas

- By increasing threads

Threads are in fact asynchronous workers that are going to poll in parallel.

Scaling threads can increase polling throughput without increase RAM resource usage, but scaling replicas scales better.

Many pollers are concerned by parsing:

- pack-poller - Packets serializing

- tcp-poller - TCP serializing

- web-httpcom-poller - HTTP communications metadata serializing

- web-httpcom-content-poller - HTTP communications content serializing

- web-httppers-poller - HTTP parsing log serializing

pack-poller:

replicas:

min: 1

threads: 1

tcp-poller:

replicas:

min: 1

threads: 1

web-httpcom-poller:

replicas:

min: 1

threads: 1

web-httpcom-content-poller:

replicas:

min: 1

threads: 1

web-httppers-poller:

replicas:

min: 1

threads: 1

Scaling Elasticsearch indexing power

Pollers are sending data in bulk to Elasticsearch for indexing.

Elasticsearch is using threads for indexing. As many threads as the host cores (real or allocated)

Reference:

- https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-managing-compute-resources.html

- https://www.elastic.co/guide/en/elasticsearch/reference/current/modules-threadpool.html#node.processors

Optimizing indices for write

operations indices, storing communications data, are time based.

- The write index has a duration / size defined by the

rolloversetting. - The write index is sharded to allow parallel indexing in many nodes (split by Whisperers)

operations:

shards: 3

ttl: 6d

rollover: 1d

- Reduce the

rolloverparameter to reduce the size of the write index, making it easier to manage for Elasticsearch- You should keep this index to a maximum of a few GBs.

- Increase the

shardsparameter to increase parallel indexing of communications. However:- Keep the shards settings lower than the number of Elasticsearch nodes

- Increase it only if you have many Whisperers to balance the load. Indeed, shards are routed by Whisperers

@id.

Shard routing option

Spider has an option to route the data in shards based on the whisperer field of resources.

In the setup file, the option is called tuning.useWhispererRoutingWithES.

When active,

- The resources are stored in a specific shard computed from a hash of the whisperer id.

- Searching is made directly to these shards.

This accelerates searching, to the expense of limiting indexing to the nodes hosting the primary shards concerned.

When you have a limited cardinality of whisperers, but with still a high load, you may want to distribute your indexing across more nodes than 1 by whisperer.

It is possible by setting this tuning option to false. It will then distribute resources to all shards evenly.

This option:

- Should be active when you have many whisperers (more than shards count) with even load.

- Should be inactive when you have only a limited count of whisperers with a high load and when you want to scale Elasticsearch horizontally and not vertically.

You may go from active (true) to inactive (false) without issue. As searches will then be done on all shards.

But when switching from inactive to active, the next searches won't find all data in the latest shards... since searches will focus only on 1 shard and not on all 😕